The post Download the 2021 Linux Foundation Annual Report appeared first on Linux.com.


In 2021, The Linux Foundation continued to see organizations embrace open collaboration and open source principles, accelerating new innovations, approaches, and best practices. As a community, we made significant progress in the areas of cloud-native computing, 5G networking, software supply chain security, 3D gaming, and a host of new industry and social initiatives.

Download and read the report today.


The post State of the Open Mainframe 2021 appeared first on Linux.com.

The mainframe is a foundational technology that has powered industries for decades, including government, financial, healthcare, and transportation. With the help of surrounding communities, the technologies built around this platform have paved the way for the emergence of a new set of technologies we see deployed today. Notably, a significant number of mainframe technologies are profoundly embracing open source.

The genesis of the open mainframe community

The mainframe has a tradition of having an open user community going back to SHARE in the 1950s. A group of mainframe technologists came together in Los Angeles, California, to share tips, insights, and, yes, code for the newly released IBM 701 computer system. SHARE was very likely the first open source software community.


Over the years, this user group met regularly to share and collaborate on using the IBM 701 and subsequent systems. The “code” that came together was freely shared between mainframe operators and developers. As the years passed, it became clear that this code needed to be curated into a repository that others in the industry could use.

Arnie Casinghino, Circa 2011

Arnold “Arnie” Casinghino was one of the first to recognize the need to collaborate. In 1975, he began to curate scripts and tools into the CBT Tape project (CBT standing for the name of Arnie’s then-employer, the now-defunct Connecticut Bank and Trust Company). Interested users at that time would send Arnie a letter with a few dollars to request a tape, a method of distribution that carries on to today even though most users download the latest release from the project’s website.

Casinghino’s vision culminated in a project that continues today and is now hosted at the Open Mainframe Project under the leadership of Sam Golab.

Linux comes to the mainframe

As Linux began to take the world by storm in the 1990s, a small group of mainframe enthusiasts started experimenting with Linux on IBM System/390 (an earlier generation of mainframe hardware). Over the last 20 years, others like Hitachi and Fujitsu also invested in enabling open source and Linux on their mainframe platforms. Linux on the mainframe marked its official start on December 18, 1999, with IBM publishing a collection of patches and additions to the Linux 2.2.13 kernel.

The year 2000 brought momentum to Linux on the mainframe. The first true “Linux distribution” for these systems came in early 2000 as a collaboration between Marist College in Poughkeepsie, N.Y., and Think Blue Linux by Millenux in Germany. By October of that year, SUSE delivered the first vendor-supported Linux distribution, in the first release of what’s now known as SUSE Linux Enterprise Server. SUSE’s first s390x distro represented an early example of the mainframe leading the way in the evolution of computing technology.

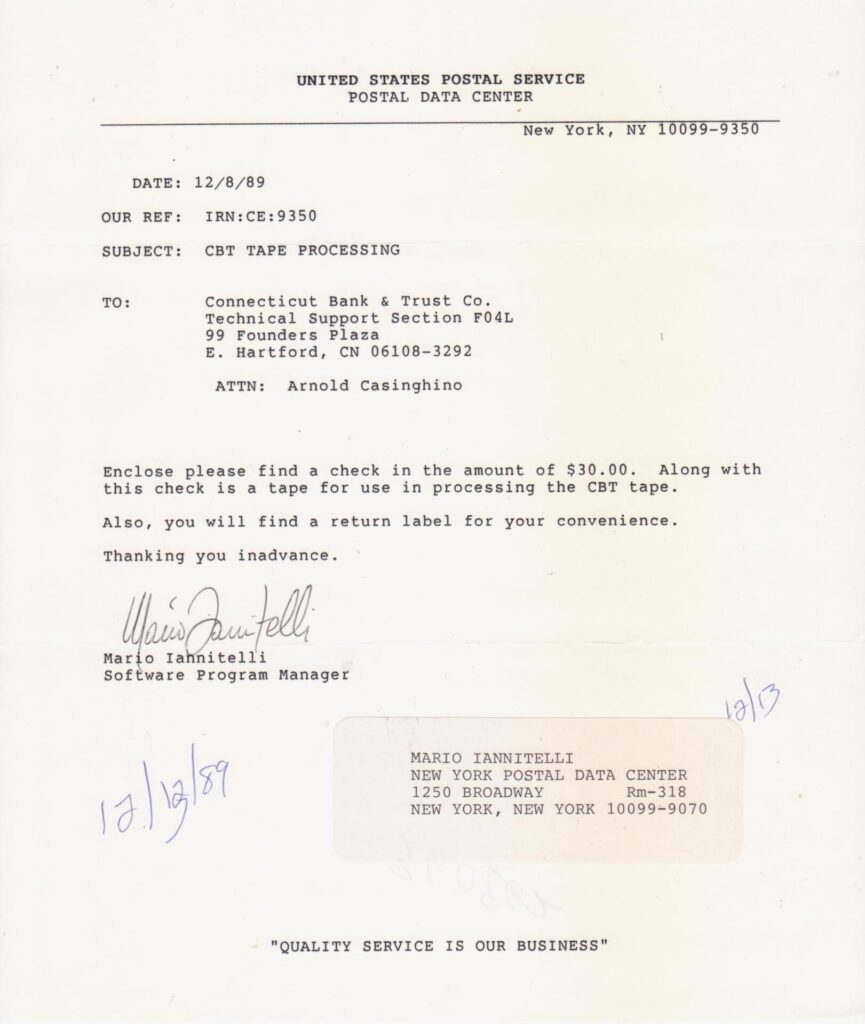

Today, nine known Linux distributions provide an s390x architecture variant.

Source: https://landscape.openmainframeproject.org/open-source?zoom=200

The expansion of the mainframe as a platform for Linux continues to be nurtured in the Open Mainframe Project, with key projects outlined below helping Linux on the mainframe continue to be a platform used by Fortune 100 companies worldwide.

- Feilong provides an interface between z/VM (the primary hypervisor for the mainframe, directly based on technology and concepts dating back to the 1960s) and modern cloud stack systems such as OpenStack. It is jointly developed by IBM, SUSE, and others.

- Tessia is a tool that automates and simplifies the installation, configuration, and testing of Linux systems running on the Z platform.

Developments in COBOL

COBOL, which stands for “Common Business-Oriented Language,” is a compiled, English-like computer programming language developed for use as a business applications language. Its roots go back to the 1950s, and COBOL is still frequently used in many industries for key applications.

The COVID-19 pandemic in April 2020 put high levels of stress on various government services due to the unprecedented number of unemployment applications and other similar needs. This put the spotlight on COBOL, as it was then the predominant technology used for these systems. This also highlighted the perceived lack of talent to support these systems, which have code going back to the 1960s.

The vast COBOL and mainframe communities quickly addressed this need and made several efforts to provide a sustainable home for COBOL.

- Calling all COBOL Programmers Forum – an Open Mainframe Project forum where developers and programmers who would like to volunteer or are available for hire can post their profiles. Whether they are actively looking for employment, retired skilled veterans looking to stay involved, students who have completed COBOL courses, or professionals wanting to volunteer, the forum offers job seekers the opportunity to specify their level of expertise and availability to assist. Employers can then connect with these individuals as needed.

- COBOL Technical Forum – a new forum created specifically to address COBOL technical questions in which experienced COBOL programmers monitor activity. The forum allows all programmers to quickly learn new techniques and draw from a broad range of community expertise to address common questions and challenges exacerbated during this unprecedented time.

- Open Source COBOL Training – the Open Mainframe Project Technical Advisory Council has approved hosting a new open source project that will lead collaborative efforts to create training materials on COBOL. The courseware was contributed by IBM based on its work with clients and institutions for higher education and is provided under an open source license.

These initiatives were followed by a formal COBOL Working Group established later in 2020 to address the long-term challenges in building a sustainable COBOL ecosystem.

In early 2021, attention turned to the tooling ecosystem for COBOL developers with the launch of the COBOL Check project. This initiative enables test-driven development (TDD) practices for COBOL by providing a unit testing framework.

Zowe brings together the industry leaders to drive the future development paradigms of the mainframe

Traditionally, organizations have been challenged by integrating mainframe applications and data with the other systems that power their enterprise. This integration task further created a talent development challenge, as the paradigms between mainframe and other enterprise computing systems differed enough to make skills not easily transferable.

Broadcom, IBM, and Rocket Software saw this challenge and independently developed various frameworks to close this gap with the mainframe development experience. These include:

- An API Mediation Layer for standardizing the API experience for mainframe applications and services

- A CLI tool that could be run on a developer’s laptop or other non-mainframe systems and used for DevOps tooling integration.

- A Web Desktop interface to make it easier to develop web-based applications that leverage mainframe services and data using common development toolkits.

These components came together in August 2018 in Zowe, which was the first open source project launched that targeted the z/OS operating system (the predominant operating system on mainframe systems). The intention of bringing this project into the vendor-neutral Open Mainframe Project was to establish Zowe as the dominant development and integration tool for mainframe systems, aligning the mainframe community around Zowe.

After Zowe 1.0 was released in February 2019, the project quickly turned to enabling a downstream ecosystem of vendor offerings by establishing the Zowe Conformance Program in August 2019. To date, there are more than 50 Zowe Conformant offerings from six different vendors in the mainframe industry.

In addition, Zowe has brought new projects into its scope, with the following incubator projects as of August 2021:

- ZEBRA provides reusable, industry-compliant, JSON-formatted RMF/SMF data records that other ISV software and users can consume with open source software (contributed by Vicom Infinity).

- Workflow WiZard helps developers and systems programmers simplify the generation and management of z/OSMF workflows (contributed by BMC).

Zowe boasts more than 300 contributors with more than 34,000 contributions as of August 2021.

Mentorship to support the mainframes of tomorrow

One of the first initiatives of the Open Mainframe Project was to establish a path to onboard students into the mainframe community, aligning with the current interest in open source development. Additionally, with the growth of open source on the platform, these projects needed maintainers with mainframe skills.

The Open Mainframe Project launched its first mentorship program in 2016, with seven students making contributions to the open source ecosystem on the mainframe. To date, more than 50 mentees have participated in this program, making important contributions to projects such as:

- Alpine Linux, resulting in the port for the s390x architecture.

- Hyperledger

- Kubernetes

- Cloud Foundry

- OpenStack

- And many of the hosted projects at the Open Mainframe Project and beyond

This summer, the Open Mainframe Project welcomed a record 14 mentees from across the globe, working with mentors in several projects, including a few new ones, such as ATOM, COBOL Programming Course, COBOL Working Group, Mainframe Open Education, Polycephaly, Software Discovery Tool, and Zowe.

The mentorship program has enabled these students to become part of the future mainframe talent, with mentorship graduates now in developer roles at ADP, IBM, SUSE, and others.

The future is bright for the mainframe

The mainframe has seen a resurgence in the past five years, with the launch of the Open Mainframe Project and the industry coming together in key open source projects in the COBOL, Linux on System Z, and z/OS ecosystems. The Open Mainframe Project hosts more than 20 projects and working groups supported by over 45 organizations as of August 2021, with no signs of slowing anytime soon.

Read more about the Open Mainframe Project in the 2020 annual report, and join us at Open Mainframe Summit on September 22-23, 2021.


The post In the trenches with Thomas Gleixner, real-time Linux kernel patch set appeared first on Linux.com.


Jason Perlow, Editorial Director at the Linux Foundation, interviews Thomas Gleixner, Linux Foundation Fellow, CTO of Linutronix GmbH, and project leader of the PREEMPT_RT real-time kernel patch set.

JP: Greetings, Thomas! It’s great to have you here this morning — although for you, it’s getting late in the afternoon in Germany. So PREEMPT_RT, the real-time patch set for the kernel is a fascinating project because it has some very important use-cases that most people who use Linux-based systems may not be aware of. First of all, can you tell me what “Real-Time” truly means?

TG: Real-Time in the context of operating systems means that the operating system provides mechanisms to guarantee that the associated real-time task processes an event within a specified period of time. Real-Time is often confused with “really fast.” The late Prof. Doug Niehaus explained it this way: “Real-Time is not as fast as possible; it is as fast as specified.”

The specified time constraint is application-dependent. A control loop for a water treatment plant can have comparatively large time constraints measured in seconds or even minutes, while a robotics control loop has time constraints in the range of microseconds. But for both scenarios missing the deadline at which the computation has to be finished can result in malfunction. For some application scenarios, missing the deadline can have fatal consequences.

In the strict sense of Real-Time, the guarantee which is provided by the operating system must be verifiable, e.g., by mathematical proof of the worst-case execution time. In some application areas, especially those related to functional safety (aerospace, medical, automation, automotive, just to name a few), this is a mandatory requirement. But for other scenarios, or scenarios where there is a separate mechanism for providing the safety requirements, the proof of correctness can be more relaxed. But even in the more relaxed case, the malfunction of a real-time system can cause substantial damage, which obviously needs to be avoided.
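To make the “as fast as specified” idea concrete, here is a minimal Python sketch. The function name `check_deadline`, the `DeadlineReport` type, and all latency figures are hypothetical illustrations and are not taken from PREEMPT_RT or from the interview. The sketch only compares measured latencies against a stated deadline; as noted above, measurements like these cannot replace a verifiable worst-case guarantee.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeadlineReport:
    """Summary of how a set of latency samples compares to a deadline."""
    deadline_s: float
    worst_case_s: float
    misses: int

    @property
    def meets_deadline(self) -> bool:
        # True only if no observed sample exceeded the specified bound.
        return self.misses == 0


def check_deadline(latencies_s, deadline_s):
    """Compare observed event-to-response latencies with a specified deadline."""
    latencies = list(latencies_s)
    if not latencies:
        raise ValueError("need at least one latency sample")
    misses = sum(1 for latency in latencies if latency > deadline_s)
    return DeadlineReport(deadline_s, max(latencies), misses)


# Hypothetical measurements, in seconds (assumed values for illustration).
water_plant = check_deadline([0.8, 1.2, 0.9], deadline_s=5.0)
robot_loop = check_deadline([40e-6, 55e-6, 250e-6], deadline_s=100e-6)

print(water_plant.meets_deadline)  # slow responses, but within the specified bound
print(robot_loop.meets_deadline)   # far faster responses, yet one sample is too late
```

In this sketch the water treatment loop responds in about a second and still satisfies its specification, while the robotics loop responds thousands of times faster and still fails, because a single sample exceeds its much tighter bound.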

JP: What is the history behind the project? How did it get started?

TG: Real-Time Linux has a history that goes way beyond the actual PREEMPT_RT project.

Linux became a research vehicle very early on. Real-Time researchers set out to transform Linux into a Real-Time Operating system and followed different approaches with more or less success. Still, none of them seriously attempted a fully integrated and perhaps upstream-able variant. In 2004 various parties started an uncoordinated effort to get some key technologies into the Linux kernel on which they wanted to build proper Real-Time support. None of them was complete, and there was a lack of an overall concept.

Ingo Molnar, working for Red Hat, started to pick up pieces, reshape them and collect them in a patch series to build the grounds for the real-time preemption patch set PREEMPT_RT. At that time, I worked with the late Dr. Doug Niehaus to port a solution we had working based on the 2.4 Linux kernel forward to the 2.6 kernel. Our work was both conflicting and complementary, so I teamed up with Ingo quickly to get this into a usable shape. Others like Steven Rostedt brought in ideas and experience from other Linux Real-Time research efforts. With a quickly forming loose team of interested developers, we were able to develop a halfway usable Real-Time solution that was fully integrated into the Linux kernel in a short period of time. That was far from a maintainable and production-ready solution. Still, we had laid the groundwork and proven that the concept of making the Linux Kernel real-time capable was feasible. The idea and intent of fully integrating this into the mainline Linux kernel over time were there from the very beginning.

JP: Why is it still a separate project from the Mainline kernel today?

TG: To integrate the real-time patches into the Linux kernel, a lot of preparatory work, restructuring, and consolidation of the mainline codebase had to be done first. While many pieces that emerged from the real-time work found their way into the mainline kernel rather quickly due to their isolation, the more intrusive changes that change the Linux kernel’s fundamental behavior needed (and still need) a lot of polishing and careful integration work.

Naturally, this has to be coordinated with all the other ongoing efforts to adapt the Linux kernel to the different use cases ranging from tiny embedded systems to supercomputers.

This also requires carefully designing the integration so that it does not get in the way of other interests or impose roadblocks on the further development of the Linux kernel, which is something the community, and especially Linus Torvalds, cares about deeply.

As long as these remaining patches are out of the mainline kernel, this is not a problem because it does not put any burden or restriction on the mainline kernel. The responsibility is on the real-time project, but on the other side, in this context, there is no restriction on taking shortcuts that would never be acceptable in the upstream kernel.

The real-time patches are fundamentally different from something like a device driver that sits at some corner of the source tree. A device driver does not cause any larger damage when it goes unmaintained and can be easily removed when it reaches the final state of bit-rot. Conversely, the PREEMPT_RT core technology is in the heart of the Linux kernel. Long-term maintainability is key as any problem in that area will affect the Linux user universe as a whole. In contrast, a bit-rotted driver only affects the few people who have a device depending on it.

JP: Traditionally, when I think about RTOS, I think of legacy solutions based on closed systems. Why is it essential we have an open-source alternative to them?

TG: The RTOS landscape is broad and, in many cases, very specialized. As I mentioned on the question of “what is real-time,” certain application scenarios require a fully validated RTOS, usually according to an application space-specific standard and often regulatory law. Aside from that, many RTOSes are limited to a specific class of CPU devices that fit into the targeted application space. Many of them come with specialized application programming interfaces which require special tooling and expertise.

The Real-Time Linux project never aimed at these narrow and specialized application spaces. It always was meant to be the solution for 99% of the use cases and to be able to fully leverage the flexibility and scalability of the Linux kernel and the broader FOSS ecosystem so that integrated solutions with mixed-criticality workloads can be handled consistently.

Developing real-time applications on a real-time enabled Linux kernel is not much different from developing non-real-time applications on Linux, except for the careful selection of system interfaces that can be utilized and programming patterns that should be avoided, but that is true for real-time application programming in general independent of the RTOS.

The important difference is that the tools and concepts are all the same, and integration into and utilizing the larger FOSS ecosystem comes for free.

The downside of PREEMPT_RT is that it can’t be fully validated, which excludes it from specific application spaces, but there are efforts underway, e.g., the LF ELISA project, to fill that gap. The reason behind this is that large multiprocessor systems have become a commodity, and the need for more complex real-time systems in various application spaces, e.g., assisted / autonomous driving or robotics, requires a more flexible and scalable RTOS approach than what most of the specialized and validated RTOSes can provide.

That’s a long way down the road. Still, there are solutions out there today which utilize external mechanisms to achieve the safety requirements in some of the application spaces while leveraging the full potential of a real-time enabled Linux kernel along with the broad offerings of the wider FOSS ecosystem.

JP: What are examples of products and systems that use the real-time patch set that people depend on regularly?

TG: It’s all over the place now. Industrial automation, control systems, robotics, medical devices, professional audio, automotive, rockets, and telecommunication, just to name a few prominent areas.

JP: Who are the major participants currently developing systems and toolsets with the real-time Linux kernel patch set?

TG: Listing them all would be equivalent to reciting the “who’s who” in the industry. On the distribution side, there are offerings from, e.g., Red Hat, SUSE, Mentor, and Wind River, which deliver RT to a broad range of customers in different application areas. On the products side, there are firms like Concurrent, National Instruments, Boston Dynamics, SpaceX, and Tesla, just to name a few.

Red Hat and National Instruments are also members of the LF collaborative Real-Time project.

JP: What are the challenges in developing a real-time subsystem or specialized kernel for Linux? Is it any different than how other projects are run for the kernel?

TG: Not really different; the same rules apply. Patches have to be posted, are reviewed, and discussed. The feedback is then incorporated. The loop starts over until everyone agrees on the solution, and the patches get merged into the relevant subsystem tree and finally end up in the mainline kernel.

But as I explained before, it needs a lot of care and effort and, often enough, a large amount of extra work to restructure existing code first to get a particular piece of the patches integrated. The result has to provide the desired functionality while at the same time not getting in the way of other interests or, ideally, providing a benefit for everyone.

The complexity of the technology, which reaches into a broad range of the core kernel code, is obviously challenging, especially combined with the mainline kernel’s rapid rate of change. Even larger changes at the core infrastructure level do not affect ongoing development and integration work too much in areas like drivers or file systems. But any change to the core infrastructure can break a carefully thought-out integration of the real-time parts into that infrastructure and send us back to the drawing board for a while.

JP: Which companies have been supporting the effort to get the PREEMPT_RT Linux kernel patches upstream?

TG: For the past five years, it has been supported by the members of the LF real-time Linux project, currently ARM, BMW, CIP, ELISA, Intel, National Instruments, OSADL, Red Hat, and Texas Instruments. CIP, ELISA, and OSADL are projects or organizations on their own which have member companies all over the industry. Former supporters include Google, IBM, and NXP.

I personally, my team and the broader Linux real-time community are extremely grateful for the support provided by these members.

However, as with other key open source projects heavily used in critical infrastructure, funding always was and still is a difficult challenge. Even if the amount of money required to keep such low-level plumbing but essential functionality sustained is comparatively small, these projects struggle with finding enough sponsors and often lack long-term commitment.

The approach to funding these kinds of projects reminds me of the Mikado Game, which is popular in Europe, where the first player who picks up the stick and disturbs the pile often is the one who loses.

That’s puzzling to me, especially as many companies build key products depending on these technologies and seem to take the availability and sustainability for granted up to the point where such a project fails, or people stop working on it due to lack of funding. Such companies should seriously consider supporting the funding of the Real-Time project.

It’s a lot like the Jenga game, where everyone pulls out as many pieces as they can up until the point where it collapses. We cannot keep taking; we have to give back to these communities putting in the hard work for technologies that companies heavily rely on.

I gave up long ago trying to make sense of that, especially when looking at the insane amounts of money thrown at the over-hyped technology of the day. Even if critical for a large part of the industry, low-level infrastructure lacks the buzzword charm that attracts attention and makes headlines — but it still needs support.

JP: One of the historical concerns was that Real-Time didn’t have a community associated with it; what has changed in the last five years?

TG: There is a lively user community, and quite a bit of the activity comes from the LF project members. On the development side itself, we are slowly gaining more people who understand the intricacies of PREEMPT_RT and also people who look at it from other angles, e.g., analysis and instrumentation. Some fields could be improved, like documentation, but there is always something that can be improved.

JP: What will the Real-Time Stable team be doing once the patches are accepted upstream?

TG: The stable team is currently overseeing the RT variants of the supported mainline stable versions. Once everything is integrated, this work will wind down to some extent as the older versions reach EOL. But their expertise will still be required to keep real-time in shape in mainline and in the supported mainline stable kernels.

JP: So once the upstreaming activity is complete, what happens afterward?

TG: Once upstreaming is done, efforts have to be made to enable RT support for specific Linux features currently disabled on real-time enabled kernels. Also, for quite some time, there will be fallout when other things change in the kernel, and there has to be support for kernel developers who run into the constraints of RT, which they did not have to think about before.

The latter is a crucial point for this effort, because there needs to be a clear longer-term commitment that the people who are deeply familiar with the matter and the concepts are not going to vanish once the mainlining is done. We can’t leave everybody else with the task of wrapping their brains around it in desperation; there cannot be institutional knowledge loss with a system as critical as this.

The lack of such a commitment would be a showstopper on the final step because we are now at the point where the notable changes are focused on the real-time only aspects rather than welcoming cleanups, improvements, and features of general value. This, in turn, circles back to the earlier question of funding and industry support — for this final step requires several years of commitment by companies using the real-time kernel.

There’s not going to be a shortage of things to work on. It’s not going to be as much as the current upstreaming effort, but as the kernel never stops changing, this will be interesting for a long time.

JP: Thank you, Thomas, for your time this morning. It’s been an illuminating discussion.

To get involved with the real-time kernel patch set for Linux, please visit the PREEMPT_RT wiki at The Linux Foundation or email real-time-membership@linuxfoundation.org.


The post Open Source Block Storage for OpenNebula Clouds appeared first on Linux.com.

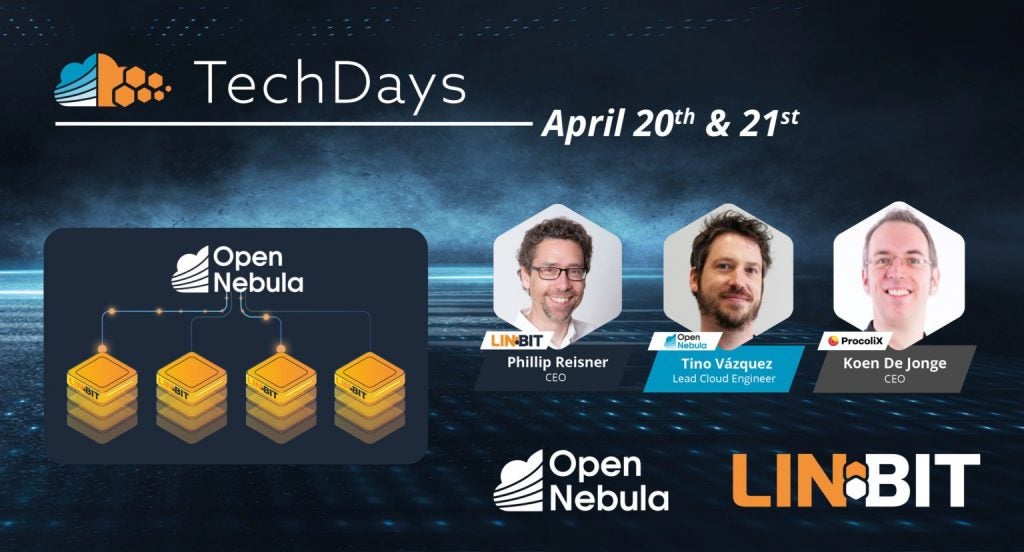

The LINBIT OpenNebula TechDays is our mutual attempt to share our combined knowledge with the open source and storage community. We want to give you a thorough understanding of combining LINBIT’s software-defined storage solution with OpenNebula clouds.

OpenNebula is a powerful, but easy-to-use, open source platform to build and manage Enterprise Clouds. OpenNebula provides unified management of IT infrastructure and applications, avoiding vendor lock-in and reducing complexity, resource consumption, and operational costs. In contrast to OpenStack, which sees itself as a collection of independent projects, OpenNebula is an integrated solution that provides all the necessary components to manage a private, hybrid, or edge cloud.

LINBIT SDS is a software-defined storage solution for Linux that delivers highly-available, replicated block-storage volumes with exceptional performance. It matches perfectly with OpenNebula.

Both open source, both born in the Linux software ecosystem. LINBIT SDS is perfectly suited for a hyper-converged deployment with OpenNebula’s hypervisor nodes since it saves a lot on CPU and memory resources when you compare it to Ceph. At the same time, it delivers higher performance (IOPS and throughput) for single volumes and accumulated over all volumes of a cluster.

Find all webinars, case studies, and discussions listed in the TechDays schedule. It is a free all-virtual event on April 20th and 21st, with direct access to LINBIT and OpenNebula experts.

See you there!


The post OpenPOWER Foundation Provides Microwatt for Fabrication on Skywater Open PDK Shuttle appeared first on Linux.com.


The OpenPOWER-based Microwatt CPU core has been selected to be included in the Efabless Open MPW Shuttle Program. Microwatt’s inclusion in the program represents a lower barrier to entry for chip manufacturing. It also demonstrates the ability to create fully designed, fabricated chips relying on a complete, end-to-end open source environment – including open governance, specifications, tooling, IP, hardware, software, and manufacturing.

Read more at OpenPOWER Foundation


The post The TARS Foundation Celebrates its First Anniversary appeared first on Linux.com.

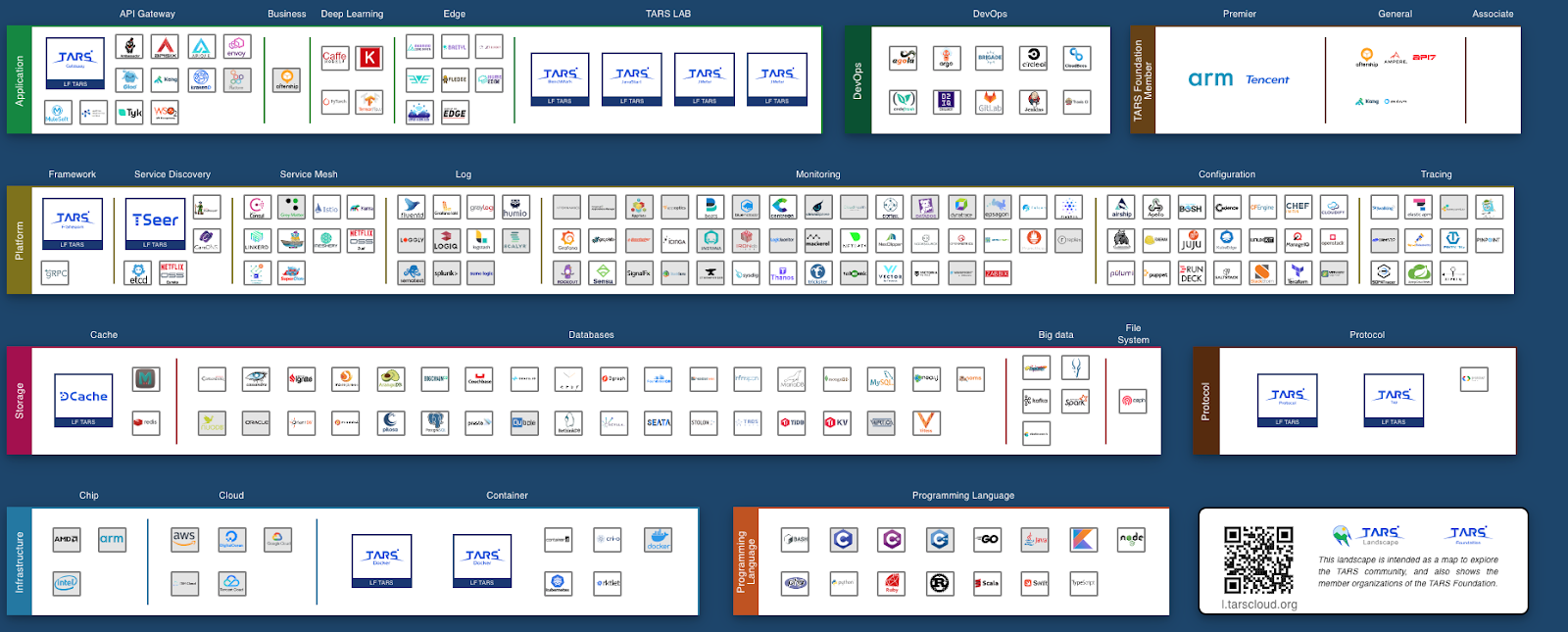

This year, four new projects have joined the TARS Foundation, expanding our technical community. The TARS Foundation launched TARS Landscape in July 2020, presenting an ideal and complete microservice ecosystem, which is the vision that the TARS open source community works to achieve. Furthermore, we welcome more open source projects to join the TARS community and go through our incubation process.

In September 2020, The Linux Foundation and TARS Foundation released a new, free training course, Building Microservice Platforms with TARS, on the edX platform. This course is designed for engineers working in microservices and enterprise managers interested in exploring internal technical architectures that support digital transformation in traditional industries. The course explains the functions, characteristics, and structure of the TARS microservices framework while demonstrating how to deploy and maintain services in different programming languages in the TARS Framework. In addition, anyone interested in software architecture will benefit from this course.

If you are interested in TARS training resources, please check out Building Microservice Platforms with TARS on edX.

Thanking our Members and Contributors

For more updates from TARS Foundation, please read our Annual Report 2020.

We would like to thank all our projects and project contributors. Thank you for your trust in the TARS Foundation. Without you and the value you bring to our entire community, our foundation would not exist.

We also want to thank our Governing Board, Technical Oversight Committee, Outreach Committee, and Community Advisor members! Every member has demonstrated their dedication and tireless efforts to ensure that the TARS Foundation is building a complete governance structure to push out a more comprehensive range of programs and make real progress. With the guidance of these passionate and wise leaders from our governing bodies, the TARS Foundation is confident it will become a neutral home for additional projects that solve critical problems surrounding microservices.

Thank you to all our members, Arm, Tencent, AfterShip, Ampere, API7, Kong, Zenlayer, and Nanjing University, for investing in the future of open source microservices. The TARS Foundation welcomes more companies and organizations to join our mission by becoming members.

Thank you to our end users! The TARS Foundation End User Community Plan was released to allow more companies to get involved with the TARS community. The purpose of the plan is to enable an open and free platform for communication and discussion about microservices technology and collaboration opportunities. Currently, the TARS Foundation has eight end-user companies, and we welcome more companies to join us as End Users.

What is next?

The TARS Foundation will continue to add more members and end-user companies in the next year while growing our shared resource pool for the benefit of our community. We will also look to include and incubate more projects, aiding our open source microservices ecosystem to empower any industry to turn ideas into applications at scale quickly. As part of our plan for next year, we aim to hold recurring meetup events worldwide and large-scale summits, creating a space for global developers to learn and exchange their ideas about microservices.

Words from our partners

Kevin Ryan, Senior Director, Arm

“Through our collaboration with the TARS Foundation and Tencent, we’ve leveraged a significant opportunity to build and develop the microservices ecosystem,” said Kevin Ryan, senior director of Ecosystem, Automotive and IoT Line of Business, Arm. “We look forward to future growth across the TARS community as contributions, members, and momentum continue to accelerate.”

Mark Shan, Open Source Alliance Chair, Tencent

As TARS Foundation turns one year old, Tencent will continue to collaborate with partners and build an open and free microservices ecosystem in open source. By consistently upgrading microservices technology and cultivating the TARS community, we look forward to creating more innovations and making social progress through technology.

Teddy Chan, CEO & Co-Founder, AfterShip

Best wishes to the TARS Foundation for turning one year old and continuing its positive influence on microservices. AfterShip will fully support the future development of the Foundation!

Mauri Whalen, VP of Software Engineering, Ampere

Ampere has been partnering with the TARS Foundation to drive innovation for microservices. Ampere understands the importance of this technology and is committed to providing Ampere/Arm64 Platform support and a performance testing framework for building the open microservices community. We are excited the TARS Foundation has reached its first birthday milestone. Their project is driving needed innovation for modern cloud workloads.

Ming Wen, Co-founder, API7

Congratulations on the first anniversary of the TARS Foundation! With the wave of enterprise digital transformation, microservices have become the infrastructure for connecting critical traffic. The TARS Foundation has gathered several well-known open source projects related to microservices, including the APISIX-based open source microservice gateway provided by api7.ai. We believe that under the TARS Foundation’s efforts, microservices and the TARS Foundation will play an increasingly important role in digital transformation.

Marco Palladino, CTO and Co-Founder, Kong

In this new era driven by digital transformation 2.0, organizations around the world are transforming their applications to microservices to grow their customer base faster, enter new markets, and ship products faster. None of this would be possible without agile, distributed, and decoupled architectures that drive innovation, efficiency, and reliability in our digital strategy: in one word, microservices. Kong supports the TARS Foundation to accelerate microservices adoption in both open source ecosystems and the enterprise landscape, and to provide a modern connectivity fabric for all our services, across every cloud and platform.

Jim Xu, Principal Engineer & Architect, Zenlayer

Microservices are the next big thing in the cloud as they enable fast development, scaling, and time-to-market of enterprise applications. TARS Foundation leads in building a strong ecosystem for open-source microservices, from the edge to the cloud. As a leading-edge cloud service provider, Zenlayer is committed to enabling microservices in multi-cloud and hybrid cloud scenarios in collaboration with the TARS Foundation community. As the TARS Foundation enters its second year, Zenlayer will continue to innovate in infrastructure, platforms, and labs to empower microservice implementation for enterprises of all kinds.

He Zhang, Professor, Nanjing University

We fully support the development of microservices and the mission to co-build a Cloud-native ecosystem. Embracing open source and community contribution, we believe the TARS Foundation is creating a future with endless possibilities ahead.

About the TARS Foundation

The TARS Foundation is a nonprofit, open source microservice foundation under the Linux Foundation umbrella to support the rapid growth of contributions and membership for a community focused on building an open microservices platform. It focuses on open source technology that helps businesses to embrace the microservices architecture as they innovate into new areas and scale their applications. For more information, please visit tarscloud.org.

The post The TARS Foundation Celebrates its First Anniversary appeared first on Linux.com.

]]>The post Unikraft: Pushing Unikernels into the Mainstream appeared first on Linux.com.

]]>Unikernels have been around for many years and are famous for providing excellent performance in boot times, throughput, and memory consumption, to name a few metrics [1]. Despite their apparent potential, unikernels have not yet seen a broad level of deployment due to three main drawbacks:

- Hard to build: Putting a unikernel image together typically requires expert, manual work that needs redoing for each application. Also, many unikernel projects are not, and don’t aim to be, POSIX compliant, and so significant porting effort is required to have standard applications and frameworks run on them.

- Hard to extract high performance: Unikernel projects don’t typically expose high-performance APIs; extracting high performance often requires expert knowledge and modifications to the code.

- Little or no tool ecosystem: Assuming you have an image to run, deploying it and managing it is often a manual operation. There is little integration with major DevOps or orchestration frameworks.

While not all unikernel projects suffer from all of these issues (e.g., some provide some level of POSIX compliance but the performance is lacking, others target a single programming language and so are relatively easy to build but their applicability is limited), we argue that no single project has been able to successfully address all of them, hindering any significant level of deployment. For the past three years, Unikraft (www.unikraft.org), a Linux Foundation project under the Xen Project’s auspices, has had the explicit aim to change this state of affairs to bring unikernels into the mainstream.

If you’re interested, read on, and please be sure to check out:

- The replay of our two FOSDEM talks [2,3] and the virtual stand [4]

- Our website (unikraft.org) and source code (https://github.com/unikraft).

- Our upcoming source code release, 0.5 Tethys (more information at http://www.unikraft.org/release/)

- unikraft.io, for industrial partners interested in Unikraft PoCs (or info@unikraft.io)

High Performance

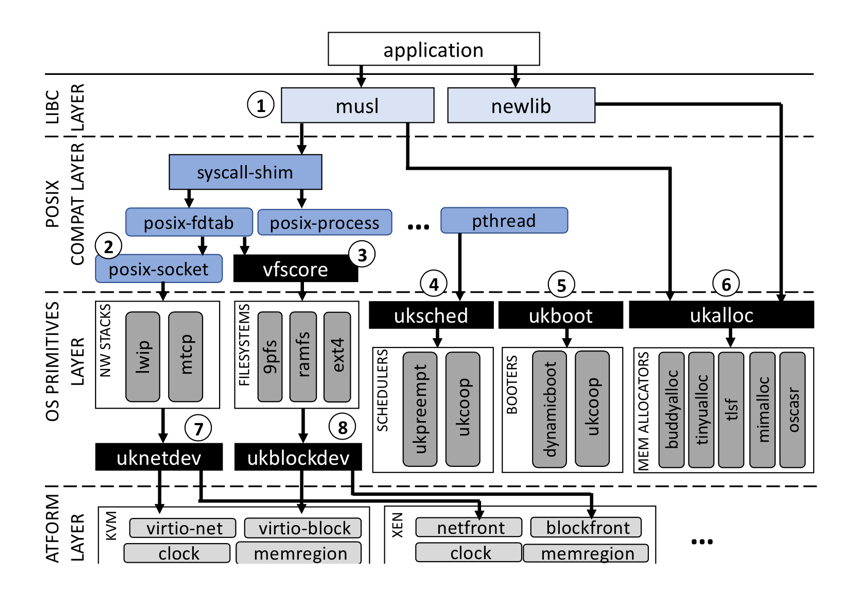

To provide developers with the ability to obtain high performance easily, Unikraft exposes a set of composable, performance-oriented APIs. The figure below shows Unikraft’s architecture: all components are libraries with their own Makefile and Kconfig configuration files, and so can be added to the unikernel build independently of each other.

Figure 1. Unikraft ‘s fully modular architecture showing high-performance APIs

APIs are also micro-libraries that can be easily enabled or disabled via a Kconfig menu; Unikraft unikernels can compose which APIs to choose to best cater to an application’s needs. For example, an RPC-style application might turn off the uksched API (➃ in the figure) to implement a high-performance, run-to-completion event loop; similarly, an application developer can easily select an appropriate memory allocator (➅) to obtain maximum performance, or use multiple different ones within the same unikernel (e.g., a simple, fast memory allocator for the boot code, and a standard one for the application itself).
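As a rough illustration of what a run-to-completion event loop looks like once the scheduler is out of the picture, here is a minimal Python sketch. It is not Unikraft code and does not use Unikraft’s APIs; the names `run_to_completion` and `echo_upper`, and the string-based request format, are hypothetical and chosen only for the example.

```python
from collections import deque
from typing import Callable, Deque, List

# Hypothetical names throughout; this is not Unikraft's API.
Handler = Callable[[str], str]


def run_to_completion(requests: List[str], handler: Handler) -> List[str]:
    """Process each request fully before taking the next one.

    There is no scheduler and no preemption: a single loop pulls a
    request off the queue, runs the handler until it returns, and only
    then moves on to the next request.
    """
    queue: Deque[str] = deque(requests)
    responses: List[str] = []
    while queue:
        request = queue.popleft()
        responses.append(handler(request))
    return responses


def echo_upper(request: str) -> str:
    # Stand-in for real request handling (e.g., serving one RPC call).
    return request.upper()


if __name__ == "__main__":
    print(run_to_completion(["ping", "stat"], echo_upper))
```

Because the handler always runs to the end before the loop continues, there is no context switching to pay for, which is the trade-off such an application accepts in exchange for giving up a scheduler.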

|

|

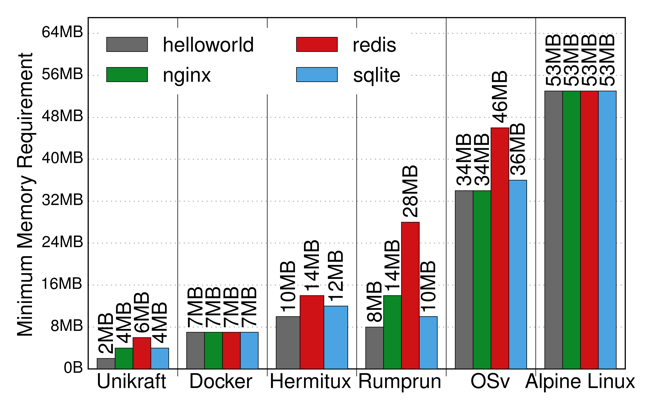

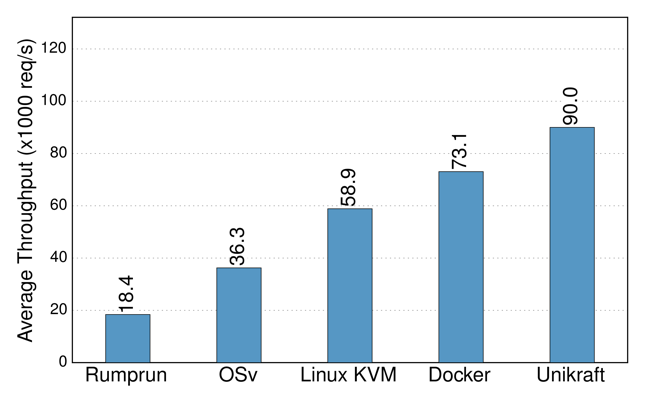

| Figure 2. Unikraft memory consumption vs. other unikernel projects and Linux | Figure 3. Unikraft NGINX throughput versus other unikernels, Docker, and Linux/KVM. |

These APIs, coupled with the fact that all of Unikraft’s components are fully modular, result in high performance. Figure 2, for instance, shows Unikraft having lower memory consumption than other unikernel projects (HermiTux, Rump, OSv) and Linux (Alpine); and Figure 3 shows that Unikraft outperforms them in terms of NGINX requests per second, reaching 90K on a single CPU core.

Further, we are working on (1) a performance profiler tool to be able to quickly identify potential bottlenecks in Unikraft images and (2) a performance test tool that can automatically run a large set of performance experiments, varying different configuration options to figure out optimal configurations.

Ease of Use, No Porting Required

Forcing users to port applications to a unikernel to obtain high performance is a showstopper. Arguably, a system is only as good as the applications (or programming languages, frameworks, etc.) it can run. Unikraft aims to achieve good POSIX compatibility; one way of doing so is supporting a libc (e.g., musl), along with a large set of Linux syscalls.

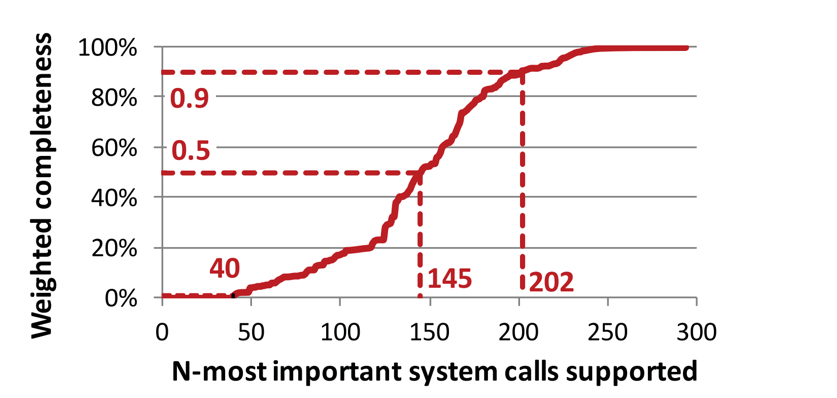

Figure 4. Only a certain percentage of syscalls are needed to support a wide range of applications

While Linux has over 300 syscalls, many of them are not needed to run a large set of applications, as shown in Figure 4 (taken from [5]). Supporting around 145 of them, for instance, is enough to run 50% of all libraries and applications in an Ubuntu distribution (many of which are irrelevant to unikernels, such as desktop applications). As of this writing, Unikraft supports over 130 syscalls and a number of mainstream applications (e.g., SQLite, NGINX, Redis), programming languages and runtime environments such as C/C++, Go, Python, Ruby, WebAssembly, and Lua, not to mention several different hypervisors (KVM, Xen, and Solo5) and ARM64 bare-metal support.
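The reasoning behind syscall coverage can be sketched in a few lines of Python: an application can run only if every syscall it needs is supported, so coverage is the fraction of applications whose requirements are a subset of the supported set. The function names and the sample data below are made up for illustration; they are not taken from [5] or from Unikraft.

```python
from typing import Dict, Set


def coverage(supported: Set[str], app_needs: Dict[str, Set[str]]) -> float:
    """Return the fraction of applications whose syscalls are all supported."""
    if not app_needs:
        return 0.0
    runnable = sum(1 for needs in app_needs.values() if needs <= supported)
    return runnable / len(app_needs)


def missing(supported: Set[str], needs: Set[str]) -> Set[str]:
    """Return the syscalls an application needs that are not yet supported."""
    return needs - supported


# Made-up sample data for illustration only.
SUPPORTED = {"read", "write", "open", "close", "mmap", "socket"}
APPS = {
    "app_a": {"read", "write", "open", "close"},
    "app_b": {"read", "write", "socket", "epoll_wait"},
}

if __name__ == "__main__":
    print(coverage(SUPPORTED, APPS))          # fraction of runnable apps
    print(missing(SUPPORTED, APPS["app_b"]))  # what app_b still needs
```

A helper like `missing` also hints at how to prioritize work: the syscalls that block the most applications are the ones worth implementing next.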

Ecosystem and DevOps

Another apparent downside of unikernel projects is the almost total lack of integration with existing, major DevOps and orchestration frameworks. Working towards that integration, in the past year we created the kraft tool, which lets users simply choose an application and a target platform (e.g., KVM on x86_64) and then takes care of building the image and running it.

Beyond this, we have several ongoing sub-projects to add support for the following in the coming months:

- Kubernetes: If you’re already using Kubernetes in your deployments, this work will allow you to deploy much leaner, faster Unikraft images transparently.

- Cloud Foundry: Similarly, users relying on Cloud Foundry will be able to generate Unikraft images through it, once again transparently.

- Prometheus: Unikernels are also notorious for having very primitive or no means for monitoring running instances. Unikraft is targeting Prometheus support to provide a wide range of monitoring capabilities.

In all, we believe Unikraft is getting closer to bridging the gap between unikernel promise and actual deployment. We are very excited about this year’s upcoming features and developments, so please feel free to drop us a line if you have any comments, questions, or suggestions at info@unikraft.io.

About the author: Dr. Felipe Huici is Chief Researcher, Systems and Machine Learning Group, NEC Laboratories Europe GmbH

References

[1] Unikernels Rethinking Cloud Infrastructure. http://unikernel.org/

[2] Is the Time Ripe for Unikernels to Become Mainstream with Unikraft? FOSDEM 2021 Microkernel developer room. https://fosdem.org/2021/schedule/event/microkernel_unikraft/

[3] Severely Debloating Cloud Images with Unikraft. FOSDEM 2021 Virtualization and IaaS developer room. https://fosdem.org/2021/schedule/event/vai_cloud_images_unikraft/

[4] Welcome to the Unikraft Stand! https://stands.fosdem.org/stands/unikraft/

[5] A study of modern Linux API usage and compatibility: what to support when you’re supporting. Eurosys 2016. https://dl.acm.org/doi/10.1145/2901318.2901341

The post Unikraft: Pushing Unikernels into the Mainstream appeared first on Linux.com.

]]>The post SIOS Offers SAP Certified High Availability And Disaster Recovery For SAP S/4HANA Environments In The Cloud appeared first on Linux.com.


]]>The post Deep Learning 101 — Role of Deep Learning in Artificial Intelligence appeared first on Linux.com.

]]>- Artificial intelligence (AI) is an area of computer science that emphasizes the creation of intelligent machines that work and react like humans.

- Machine learning (ML) is an approach to achieving artificial intelligence. The ML approach provides computers with the ability to learn without being explicitly programmed. At its most basic, it is the practice of using algorithms to parse data, learn from it, and then make a prediction about something in the world.

- Deep learning (DL) is a technique for implementing machine learning. DL is the application of artificial neural networks that contain more than one hidden layer to learning tasks.

- Artificial neural networks are computing systems inspired by the biological neural networks that constitute animal brains. An artificial neural network is an interconnected group of nodes, akin to the vast network of neurons in a brain. In a typical diagram of such a network, each circular node represents an artificial neuron and each arrow represents a connection from the output of one neuron to the input of another.
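To make the definitions above concrete, here is a minimal Python sketch of a forward pass through a small network with two hidden layers, which is the “more than one hidden layer” case that deep learning refers to. The layer sizes, the sigmoid activation, and the hand-picked weights are assumptions made for illustration; a real network would learn its weights from data.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def sigmoid(x: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer(inputs: Vector, weights: Matrix, biases: Vector) -> Vector:
    """One fully connected layer: each row of weights feeds one neuron."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(inputs: Vector, layers: List[Tuple[Matrix, Vector]]) -> Vector:
    """Pass the inputs through every layer in order."""
    activations = inputs
    for weights, biases in layers:
        activations = layer(activations, weights, biases)
    return activations


# Hand-picked weights for illustration only.
NETWORK: List[Tuple[Matrix, Vector]] = [
    ([[0.5, -0.5], [0.25, 0.75]], [0.0, 0.0]),  # hidden layer 1: 2 inputs -> 2 neurons
    ([[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0]),   # hidden layer 2: 2 -> 2 neurons
    ([[1.0, 1.0]], [0.0]),                      # output layer: 2 -> 1 neuron
]

if __name__ == "__main__":
    print(forward([0.0, 0.0], NETWORK))
```

Each weight corresponds to one arrow between two neurons, and each entry in a layer’s output corresponds to one node.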

Read more at Medium

The post Deep Learning 101 — Role of Deep Learning in Artificial Intelligence appeared first on Linux.com.

]]>The post Assessing Progress in Automation Technologies appeared first on Linux.com.

]]>

Companies cite “lack of data” and “lack of skilled people” as the main factors holding back adoption. In many instances, “lack of data” is literally the state of affairs: companies have yet to collect and store the data needed to train the ML models they desire. The “skills gap” is real and persistent.

Read more at O’Reilly

The post Assessing Progress in Automation Technologies appeared first on Linux.com.

]]>