The post Download the 2021 Linux Foundation Annual Report appeared first on Linux.com.


In 2021, The Linux Foundation continued to see organizations embrace open collaboration and open source principles, accelerating new innovations, approaches, and best practices. As a community, we made significant progress in the areas of cloud-native computing, 5G networking, software supply chain security, 3D gaming, and a host of new industry and social initiatives.

Download and read the report today.


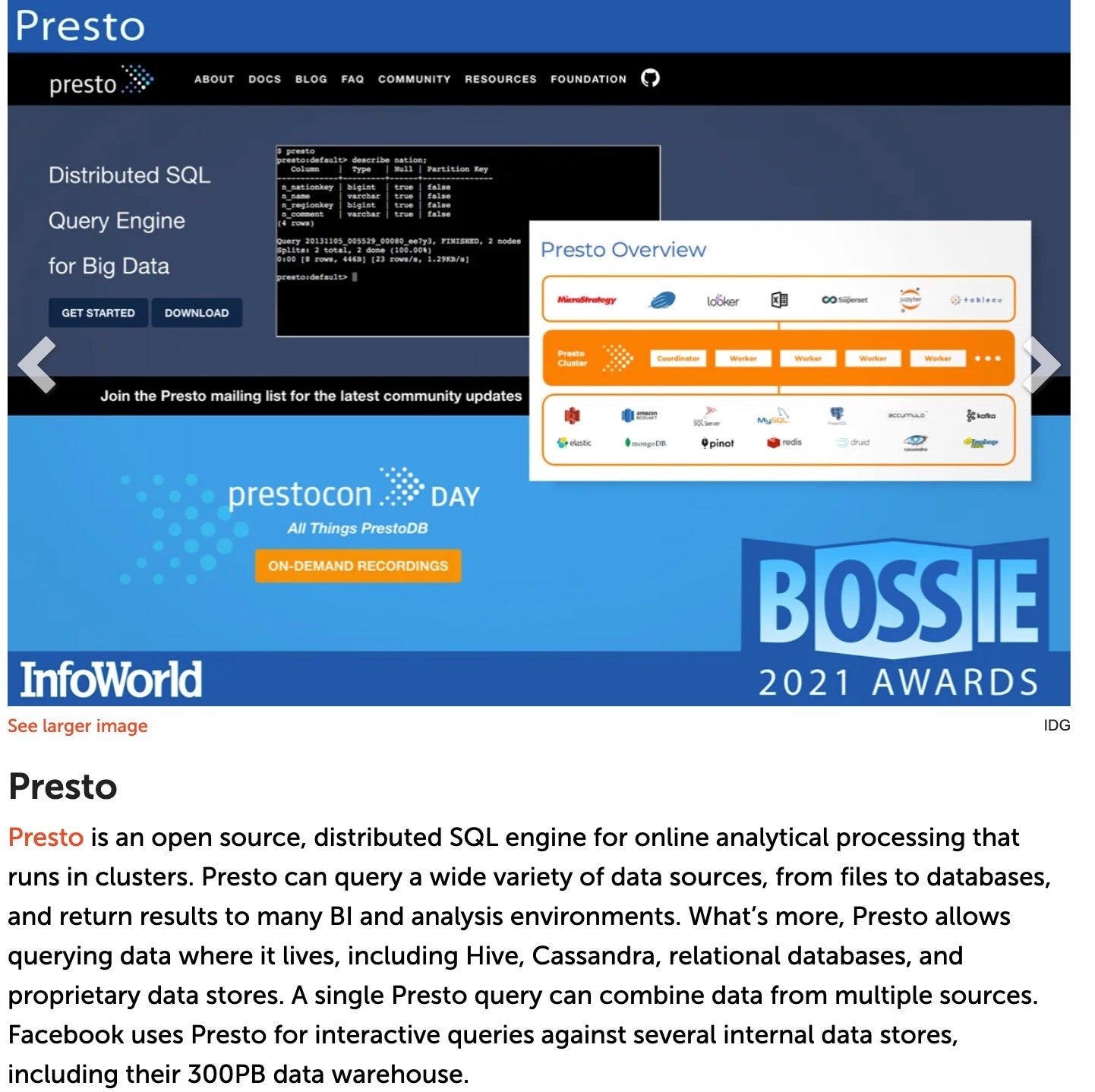

The post PrestoDB is Recognized as Best Open Source Software of 2021 Bossie Awards (InfoWorld) appeared first on Linux.com.

Read more about the 2021 Bossie Awards at InfoWorld.


The post Measuring the Health of Open Source Communities appeared first on Linux.com.

If you manage or want to be part of an open source project, you might have wondered if the project is healthy or not and how to measure key performance indicators relating to project health.

You could choose to analyze different aspects of the project: its technical health (e.g., the number of forks on GitHub, the number of contributors over time, and the number of bugs reported over time), its financial health (e.g., donations and revenue over time), its social aspects (e.g., social media mentions, post shares, and sentiment analysis across social media channels), and its diversity and inclusion aspects (e.g., having a code of conduct, running inclusion activities at events, and using color-blind-accessible materials in presentations and project front-end designs).

The question is, how do you measure such aspects? To determine a project’s overall health, you should compute metrics and analyze them over time. It’s helpful to have such metrics in a dashboard to facilitate analysis and decision-making.
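As a minimal sketch of what "computed and analyzed over time" can mean, the following Python counts distinct active contributors per month. It assumes commit records have already been exported as (author, ISO date) pairs; the record format and the `monthly_active_contributors` name are hypothetical and not part of any particular tool.

```python
from collections import defaultdict
from datetime import date


def monthly_active_contributors(commits):
    """Return {"YYYY-MM": number of distinct authors}, ordered by month.

    `commits` is an iterable of (author, "YYYY-MM-DD") tuples (hypothetical format).
    """
    authors_by_month = defaultdict(set)
    for author, day in commits:
        d = date.fromisoformat(day)
        authors_by_month[f"{d.year:04d}-{d.month:02d}"].add(author)
    # Sort chronologically so the result can be plotted as a time series.
    return {month: len(authors) for month, authors in sorted(authors_by_month.items())}


if __name__ == "__main__":
    sample = [
        ("alice", "2021-01-04"), ("bob", "2021-01-20"),
        ("alice", "2021-02-02"), ("carol", "2021-02-15"), ("dave", "2021-02-28"),
    ]
    print(monthly_active_contributors(sample))  # {'2021-01': 2, '2021-02': 3}
```

A trend across many months says more about health than any single snapshot, which is why a dashboard usually plots such series rather than one number.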

Why do metrics matter?

“The goal here is not to construct an enormous vacuum cleaner to suck every tiny detail of your community into a graph. The goal is instead to identify what we don’t know about our community and to use measurements as a means to understand those things better.”

The Art of Community – Jono Bacon

Open source software needs community. By knowing more about the community through different metrics, stakeholders can make informed decisions. For example, developers can select the best project to join, maintainers can decide which governance measures are effective, end-users can select the healthier project that will live longer (and prosper), and investors can select the best project to invest in [1].

Furthermore, Open Source Program Offices (OSPOs), i.e., offices inside companies that aim to manage the open source ecosystems the company depends on [5], can assess a project’s health and sustainability by analyzing different metrics. OSPOs are becoming very popular because around 90% of the components of modern applications are open source [6]. Thus, measuring the risks of consuming, contributing to, and releasing open source software is very important to OSPOs [5].

How do we define which metrics to evaluate?

- Set your goals: Measuring without a goal is pointless. Goals are concrete targets that tell you what the community wants to achieve [3].

- Find reliable statistical sources: After defining your goals, you can identify the sources that will help you track them. It is essential to find ways to get statistics on the most important goals [4]. Some statistics are readily available; on GitHub, for example, you can collect the number of stars, forks, and contributors of a repository. It is also possible to get the number of mailing list subscribers and project website visits. Other statistics are less obvious, though, and you might need tools to help extract them.

- Interpret the statistics: Frame them in terms of the “4 P’s”: People, Project, Process, and Partners [4].

  - Look at the numbers mostly related to the People in the community, such as contributors’ productivity, which channels have the most impact, etc.

  - Then, look at the velocity and maturity of your Project, such as the number of PRs and the number of issues.

  - After that, look at the maturity of your Process, i.e., what’s your review process? How long does it take to solve an issue?

  - Finally, look at the ecosystem view regarding your Partners, that is, statistics on project dependencies and projects that depend on you.

- Use dashboards to evaluate your metrics: Many existing tools, such as LFX Insights, Bitergia, and GrimoireLab, help create dashboards to analyze and measure the health of open source communities.

- Make changes: After measuring, it is necessary to make changes based on those measurements.
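For the Process view, a common starting point is the question "How long does it take to solve an issue?" The following is a minimal Python sketch, assuming issues have been exported with opening and closing timestamps (the field names are hypothetical). It reports the median rather than the mean so that a single long-lived issue does not dominate, and it counts the issues still open as a simple backlog figure.

```python
from datetime import datetime
from statistics import median


def issue_resolution_stats(issues):
    """Return (median days to close, number of issues still open).

    Each issue is a dict with an ISO-8601 "opened" timestamp and a
    "closed" timestamp that is None while the issue is still open.
    """
    durations = []
    still_open = 0
    for issue in issues:
        if issue.get("closed") is None:
            still_open += 1
            continue
        opened = datetime.fromisoformat(issue["opened"])
        closed = datetime.fromisoformat(issue["closed"])
        # Convert the elapsed time to fractional days.
        durations.append((closed - opened).total_seconds() / 86400)
    median_days = median(durations) if durations else None
    return median_days, still_open


if __name__ == "__main__":
    sample = [
        {"opened": "2021-03-01T00:00:00", "closed": "2021-03-03T00:00:00"},
        {"opened": "2021-03-01T00:00:00", "closed": "2021-03-11T00:00:00"},
        {"opened": "2021-03-05T00:00:00", "closed": "2021-03-06T00:00:00"},
        {"opened": "2021-03-07T00:00:00", "closed": None},
    ]
    print(issue_resolution_stats(sample))  # (2.0, 1)
```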

Learning from examples

Different projects use different strategies to measure the project’s health.

The CHAOSS Community creates analytics and metrics to help understand project health. They have many working groups, each one focusing on a specific kind of metric. For example,

- The Diversity and Inclusion working group focuses on diversity and inclusion at events, how diverse and inclusive a community’s governance is, and how healthy the community leadership is.

- The Evolution working group creates metrics for analyzing the types and frequency of activities involved in software development, project quality improvement, and community growth.

- The Value working group creates metrics for identifying the degree to which a project improves people’s lives beyond the software project, the degree to which the project is valuable to a user or contributor, and the degree to which the project is monetarily valuable from an organization’s point of view.

- The Risk working group creates metrics to understand the quality of a specific software package, potential intellectual property issues, and how transparent a given software package is with respect to licenses, dependencies, etc.

The Mozilla project collaborated with Bitergia and Analyse & Tal to build an interactive network visualization of Mozilla’s contributor communities. By visualizing different metrics, they found that Mozilla has not just one community but many, differing in areas of contribution, motivations, engagement levels, etc. Based on that, they built a report to visualize how these different communities are interconnected.
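Mozilla's analysis used far richer data and visualization, but the basic idea that one project can contain several communities can be sketched with a graph: contributors are linked when they work in the same area, and each connected component is treated as one community. This minimal Python version uses plain connected components (a much simpler technique than proper community detection), and the (contributor, area) input format is a hypothetical assumption.

```python
from collections import defaultdict, deque


def contributor_communities(contributions):
    """Group contributors linked through shared contribution areas.

    `contributions` is a list of (contributor, area) pairs. Two contributors
    are connected if they contributed to the same area; each connected
    component is returned as one sorted list of contributors.
    """
    people_by_area = defaultdict(set)
    areas_by_person = defaultdict(set)
    for person, area in contributions:
        people_by_area[area].add(person)
        areas_by_person[person].add(area)

    seen = set()
    communities = []
    for start in sorted(areas_by_person):
        if start in seen:
            continue
        # Breadth-first search over person -> area -> person links.
        component, queue = [], deque([start])
        seen.add(start)
        while queue:
            person = queue.popleft()
            component.append(person)
            for area in areas_by_person[person]:
                for other in people_by_area[area]:
                    if other not in seen:
                        seen.add(other)
                        queue.append(other)
        communities.append(sorted(component))
    return communities


if __name__ == "__main__":
    data = [("ana", "l10n"), ("bo", "l10n"), ("bo", "docs"),
            ("cy", "core"), ("di", "core")]
    print(contributor_communities(data))  # [['ana', 'bo'], ['cy', 'di']]
```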

LFX Insights

Many projects, such as Kubernetes and TARS, use the LFX Insights tool to analyze their communities.

The LFX Insights dashboard helps project communities evaluate different metrics concerning open source development to grow a sustainable open source ecosystem. The tool has distinct features to support various stakeholders [2], such as

- Maintainers and project leads can get multi-dimensional reports on the project, help avoid maintainer burnout, and ensure the project’s health, security, and sustainability.

- Project marketers and community evangelists can use the metrics to attract new members, engage the community, and identify opportunities to increase awareness.

- Members and corporate sponsors can learn which communities and software to engage with, communicate their impact within the community, and evaluate their employees’ open source contributions.

- Open source developers can learn where to focus their efforts, showcase their leadership and expertise, and manage their affiliations and impact.

For source code repositories, the tool reports the total number of commits and the number of commits by contributor, the number of contributors, the top contributors by commits, and the companies that contribute most to the project. Pull requests (PRs) can be collected from many tools, such as Gerrit and GitHub. Furthermore, users, maintainers, and contributors to Linux Foundation projects, such as TARS, can extract various metrics from LFX Insights.

Similarly to commits, the tool reports the number of PRs in total, by contributor, and by company. It also calculates the average time to review a PR and shows the PRs that are still waiting to be merged. You can also extract metrics for issues and continuous integration tools. Besides that, LFX Insights allows projects to collect communication and collaboration information from different channels such as mailing lists, Slack, and Twitter.
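LFX Insights computes these numbers itself; the sketch below only illustrates the kind of aggregation involved. It is not the LFX Insights API: it assumes a hypothetical list of PR records, each with the author's company, a creation time, the time of the first review (or None), and whether the PR is still open.

```python
from collections import Counter
from datetime import datetime


def pr_metrics(prs):
    """Aggregate PR counts by company, mean hours to first review, and open PRs.

    Each PR is a dict: {"company": str, "created": ISO str,
    "first_review": ISO str or None, "open": bool} (hypothetical format).
    """
    by_company = Counter(pr["company"] for pr in prs)
    # Only PRs that have received a review contribute to the review-time average.
    review_hours = [
        (datetime.fromisoformat(pr["first_review"])
         - datetime.fromisoformat(pr["created"])).total_seconds() / 3600
        for pr in prs
        if pr["first_review"] is not None
    ]
    avg_review = sum(review_hours) / len(review_hours) if review_hours else None
    return {
        "total": len(prs),
        "by_company": dict(by_company),
        "avg_hours_to_first_review": avg_review,
        "awaiting_merge": sum(1 for pr in prs if pr["open"]),
    }
```

For example, two PRs reviewed after 6 and 18 hours give an average of 12.0 hours, while an unreviewed PR is counted in the totals but not in that average.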

Projects might have different goals when using LFX Insights. The TARS project, part of the TARS Foundation, uses the LFX Insights tool to get a big-picture view of each sub-project (such as TARSFramework, TARSGo, etc.). Through the dashboards created by the LFX Insights tool, the TARS community can see the statistics of each project and of the community as a whole (see Figures 1 and 2).

Using LFX Insights tools, the TARS community analyzes how many people contribute to each project and which organizations contribute to TARS. Additionally, they extract the number of commits and lines of code contributed by each contributor. The TARS community believes that by analyzing such metrics, they can attract and retain more contributors.
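The per-contributor numbers mentioned above can be sketched as a simple aggregation. This is a hypothetical illustration, not how LFX Insights or TARS compute them: it assumes each commit record carries an author, an organization, and a count of added lines, ranks contributors by commit count, and lists the contributing organizations.

```python
from collections import defaultdict


def contributor_summary(commits):
    """Return (contributors ranked by commits, sorted list of organizations).

    Each commit is a dict: {"author": str, "org": str, "lines_added": int}
    (hypothetical format).
    """
    per_author = defaultdict(lambda: {"commits": 0, "lines_added": 0})
    orgs = set()
    for c in commits:
        stats = per_author[c["author"]]
        stats["commits"] += 1
        stats["lines_added"] += c["lines_added"]
        orgs.add(c["org"])
    # Highest commit count first; ties keep first-seen order (sorted is stable).
    ranked = sorted(per_author.items(), key=lambda kv: kv[1]["commits"], reverse=True)
    return ranked, sorted(orgs)
```

Counting added lines is only one convention; some tools count additions plus deletions, so the choice should be stated wherever such numbers are reported.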

About the authors:

Isabella Ferreira is an Ambassador at the TARS Foundation, a cloud-native open-source microservice foundation under the Linux Foundation.

Mark Shan is the Chair at Tencent Open Source Alliance and also Board Chair of the TARS Foundation Governing Board.

REFERENCES

[1] Jansen, Slinger. “Measuring the health of open source software ecosystems: Beyond the scope of project health.” Information and Software Technology 56.11 (2014): 1508-1519.

[2] https://www.youtube.com/watch?v=hwTOrDg3LsI

[3] https://opensource.com/bus/16/8/measuring-community-health

[4] https://dzone.com/articles/-measuring-metrics-in-open-source-projects

[5] https://opensource.com/article/20/5/open-source-program-office

[6] https://fossa.com/blog/building-open-source-program-office-ospo/


The post Chris Aniszczyk Talks About The Open 3D Foundation appeared first on Linux.com.


The post OpenAPI Specification 3.1.0 Available Now appeared first on Linux.com.


Introduction

The OpenAPI Initiative (OAI), a consortium of forward-looking industry experts who focus on standardizing how APIs are categorized and described, released the OpenAPI Specification 3.1.0 in February. This new version introduces better support for webhooks and adds 100% compatibility with the latest draft (2020-12) of JSON Schema.

Complete information on the OpenAPI Specification (OAS) is available for immediate access here: https://spec.openapis.org/oas/v3.1.0. It includes definitions, specifications including Schema Objects, and much more.

Also, the OAI sponsored the creation of new documentation to make it easier to understand the structure of the specification and its benefits. It is available here: https://oai.github.io/Documentation. It is intended for HTTP-based API designers and writers wishing to benefit from having their API formalized in an OpenAPI Description document.

What is the OpenAPI Specification?

The OAS is the industry standard for describing modern APIs. It defines a standard, programming-language-agnostic interface description for HTTP APIs, which allows both humans and computers to discover and understand the capabilities of a service without requiring access to source code, additional documentation, or inspection of network traffic.

The OAS is used by organizations worldwide, including Atlassian, Bloomberg, eBay, Google, IBM, Microsoft, Oracle, Postman, SAP, SmartBear, Vonage, and many more.

JSON Schema Now Supported

The Schema Object defines everything inside the `schema` keyword in OpenAPI. Previously, this was loosely based on JSON Schema and referred to as a “subset superset” because it added some things to JSON Schema and removed others. The OpenAPI and JSON Schema communities worked together to align the two specifications, and with them, tooling and approach.
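One visible consequence of the alignment: OpenAPI 3.0's own `nullable: true` keyword is not part of 3.1, where a nullable value is written the JSON Schema way, with `"null"` in a `type` array. The following minimal Python sketch (the function name is hypothetical) rewrites only that one keyword in a 3.0-style schema; a real migration must also handle other differences, which this sketch deliberately ignores.

```python
def nullable_to_type_array(schema):
    """Rewrite OpenAPI 3.0 `nullable: true` as a JSON Schema 2020-12 type array.

    Returns a new top-level dict; untouched nested values are shared with the
    input. Only `nullable` combined with a single string `type` is converted;
    `nullable` without a `type` is simply dropped.
    """
    if not isinstance(schema, dict):
        return schema
    out = {k: v for k, v in schema.items() if k != "nullable"}
    if schema.get("nullable") is True and isinstance(schema.get("type"), str):
        out["type"] = [schema["type"], "null"]
    # Recurse into the subschema locations most commonly used in OpenAPI documents.
    if isinstance(out.get("properties"), dict):
        out["properties"] = {
            name: nullable_to_type_array(sub) for name, sub in out["properties"].items()
        }
    for key in ("items", "additionalProperties", "not"):
        if isinstance(out.get(key), dict):
            out[key] = nullable_to_type_array(out[key])
    for key in ("allOf", "anyOf", "oneOf"):
        if isinstance(out.get(key), list):
            out[key] = [nullable_to_type_array(sub) for sub in out[key]]
    return out


if __name__ == "__main__":
    v30 = {"type": "object", "properties": {"nickname": {"type": "string", "nullable": True}}}
    print(nullable_to_type_array(v30))
    # {'type': 'object', 'properties': {'nickname': {'type': ['string', 'null']}}}
```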

Supporting modern JSON Schema is a significant step forward.

“The mismatch between OpenAPI JSON Schema-like structures and JSON Schema itself has long been a problem for users and implementers. Full alignment of OpenAPI 3.1.0 with JSON Schema draft 2020-12 will not only save users much pain but also usher in a new standardized approach to schema extensions,” said Ben Hutton, JSON Schema project lead.

“We’ve spent the last few years (and release) making sure we can clearly hear and understand the issues the community faces. With our time-limited, volunteer-based effort, not only have we fixed many pain points and added new features, but JSON Schema vocabularies allow standards to be defined which cater for use cases beyond validation, such as the generation of code, UI, and documentation.”

Major Changes in OpenAPI Specification 3.1.0

- JSON Schema vocabularies alignment

- New top-level element for describing Webhooks that are registered and managed out of band

- Support for identifying API licenses using the standard SPDX identifier

- The top-level `paths` object is now optional, making it simpler to create reusable libraries of components. Reusable Path Items can be described in the components object.

- Support for describing APIs secured using client certificates
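To make the list concrete, here is a minimal sketch of an OpenAPI 3.1.0 description built as a Python dict and serialized to JSON (JSON and YAML are the usual formats). The API title, webhook, path item, and schema names are hypothetical; the field names follow the 3.1.0 specification at https://spec.openapis.org/oas/v3.1.0, which should be checked before relying on this example.

```python
import json

# A minimal OpenAPI 3.1.0 description. All names (title, "newPet", "Ping", "Pet")
# are hypothetical examples.
openapi_doc = {
    "openapi": "3.1.0",
    "info": {
        "title": "Example Pet Events API",
        "version": "1.0.0",
        # 3.1 lets the license be identified by an SPDX identifier.
        "license": {"name": "Apache 2.0", "identifier": "Apache-2.0"},
    },
    # No top-level `paths`: in 3.1 it is optional, so a document can describe
    # only webhooks and/or reusable components.
    "webhooks": {
        # A request the API provider sends to a URL registered out of band.
        "newPet": {
            "post": {
                "requestBody": {
                    "content": {
                        "application/json": {"schema": {"$ref": "#/components/schemas/Pet"}}
                    }
                },
                "responses": {"200": {"description": "Webhook received"}},
            }
        }
    },
    "components": {
        "schemas": {
            "Pet": {
                "type": "object",
                "required": ["name"],
                "properties": {
                    "name": {"type": "string"},
                    # JSON Schema 2020-12 style nullability.
                    "tag": {"type": ["string", "null"]},
                },
            }
        },
        # Reusable Path Items live in the components object in 3.1.
        "pathItems": {
            "Ping": {"get": {"responses": {"200": {"description": "OK"}}}}
        },
        "securitySchemes": {
            # `mutualTLS` is the 3.1 scheme type for client-certificate security.
            "clientCert": {"type": "mutualTLS"}
        },
    },
}

if __name__ == "__main__":
    print(json.dumps(openapi_doc, indent=2))
```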

Full OAS 3.1.0 details are available here: https://spec.openapis.org/oas/v3.1.0. The new documentation is available here: https://oai.github.io/Documentation/

Get Involved in the OAI

To learn more about participating in the evolution of the OAS: https://www.openapis.org/participate/how-to-contribute

- Become a Member

- OpenAPI Specification Twitter

- OpenAPI Specification GitHub – Get started immediately!

- Share your OpenAPI Spec v3 Implementations

Conclusion

To achieve the impressive goal of full compatibility with modern JSON Schema, the OAS underwent important updates that make things much easier for tooling maintainers, who no longer need to guess which draft a schema follows by looking at where it is referenced from. This alone makes OAS 3.1.0 worth evaluating and strongly considering.


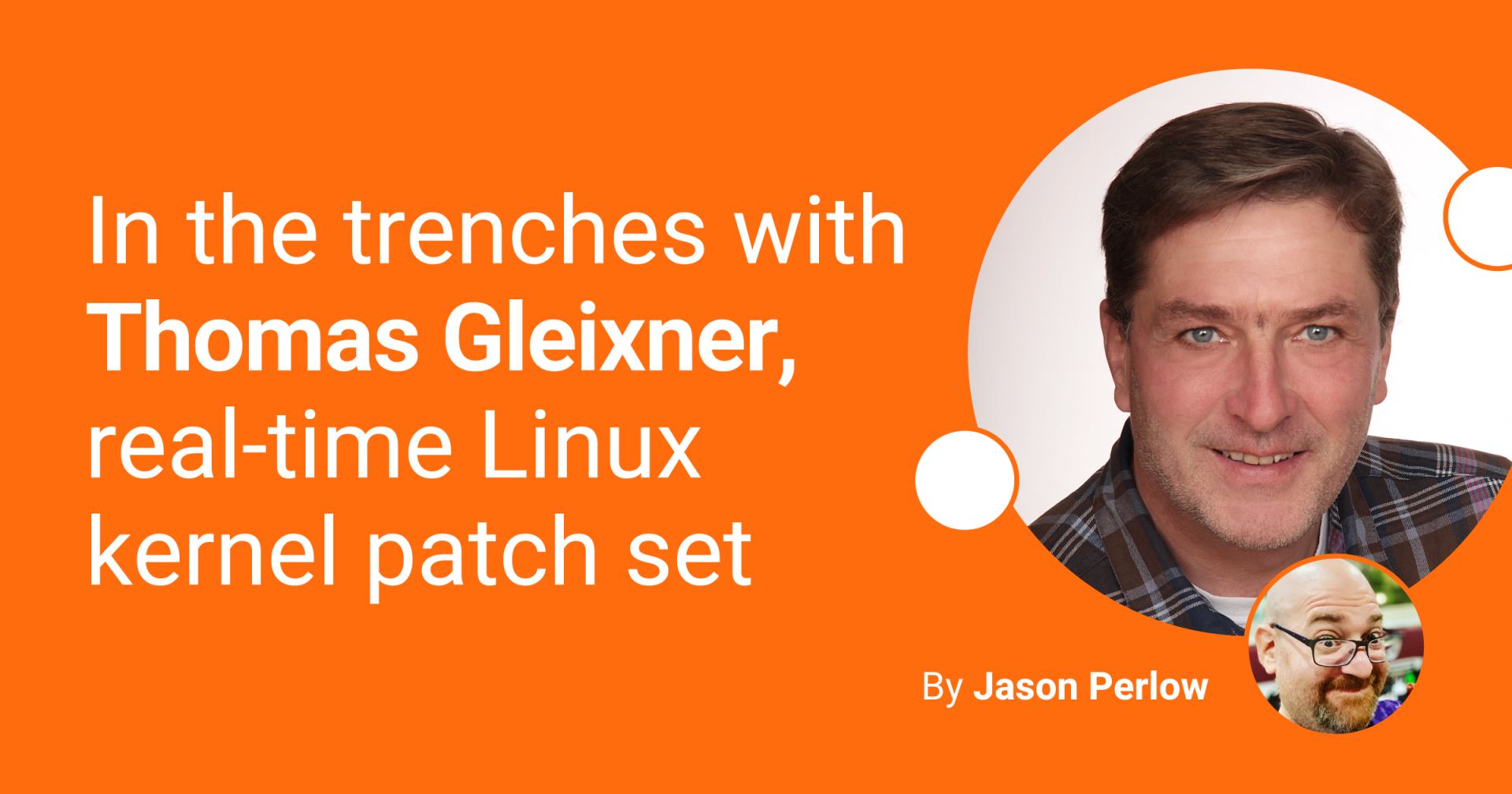

The post In the trenches with Thomas Gleixner, real-time Linux kernel patch set appeared first on Linux.com.


Jason Perlow, Editorial Director at the Linux Foundation, interviews Thomas Gleixner, Linux Foundation Fellow, CTO of Linutronix GmbH, and project leader of the PREEMPT_RT real-time kernel patch set.

JP: Greetings, Thomas! It’s great to have you here this morning, although for you, it’s getting late in the afternoon in Germany. So PREEMPT_RT, the real-time patch set for the kernel, is a fascinating project because it has some very important use cases that most people who use Linux-based systems may not be aware of. First of all, can you tell me what “Real-Time” truly means?

TG: Real-Time in the context of operating systems means that the operating system provides mechanisms to guarantee that the associated real-time task processes an event within a specified period of time. Real-Time is often confused with “really fast.” The late Prof. Doug Niehaus explained it this way: “Real-Time is not as fast as possible; it is as fast as specified.”

The specified time constraint is application-dependent. A control loop for a water treatment plant can have comparatively large time constraints measured in seconds or even minutes, while a robotics control loop has time constraints in the range of microseconds. But in both scenarios, missing the deadline at which the computation has to be finished can result in malfunction. For some application scenarios, missing the deadline can have fatal consequences.
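The "as fast as specified" idea can be expressed as a simple check: each activation of a periodic task has a deadline, and what matters is whether any completion lands after it, not the average speed. A minimal sketch, assuming activation and completion timestamps (in microseconds) have already been recorded by some tracing tool; the function name and data layout are hypothetical. Measuring observed response times only yields evidence, not the mathematically verified worst-case bound that strict real-time requires.

```python
def deadline_report(samples, relative_deadline_us):
    """Check recorded task runs against a relative deadline.

    `samples` is a list of (activation_us, completion_us) pairs.
    Returns (number of deadline misses, worst observed response time in us).
    """
    misses = 0
    worst = 0
    for activation, completion in samples:
        response = completion - activation
        worst = max(worst, response)
        # Finishing exactly at the deadline still counts as meeting it.
        if response > relative_deadline_us:
            misses += 1
    return misses, worst


if __name__ == "__main__":
    # A robotics-style control loop with a 100 us deadline and a 1 ms period.
    runs = [(0, 40), (1000, 1090), (2000, 2130), (3000, 3020)]
    print(deadline_report(runs, relative_deadline_us=100))  # (1, 130)
```

Here the loop is fast on average, but the single 130 µs run violates the 100 µs specification, whereas a loop that always took 90 µs would pass.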

In the strict sense of Real-Time, the guarantee provided by the operating system must be verifiable, e.g., by mathematical proof of the worst-case execution time. In some application areas, especially those related to functional safety (aerospace, medical, automation, automotive, just to name a few), this is a mandatory requirement. But in other scenarios, or in scenarios where a separate mechanism provides the safety requirements, the proof of correctness can be more relaxed. Even in the more relaxed case, though, the malfunction of a real-time system can cause substantial damage, which obviously must be avoided.

JP: What is the history behind the project? How did it get started?

TG: Real-Time Linux has a history that goes way beyond the actual PREEMPT_RT project.

Linux became a research vehicle very early on. Real-Time researchers set out to transform Linux into a Real-Time Operating system and followed different approaches with more or less success. Still, none of them seriously attempted a fully integrated and perhaps upstream-able variant. In 2004 various parties started an uncoordinated effort to get some key technologies into the Linux kernel on which they wanted to build proper Real-Time support. None of them was complete, and there was a lack of an overall concept.

Ingo Molnar, working for Red Hat, started to pick up pieces, reshape them and collect them in a patch series to build the grounds for the real-time preemption patch set PREEMPT_RT. At that time, I worked with the late Dr. Doug Niehaus to port a solution we had working based on the 2.4 Linux kernel forward to the 2.6 kernel. Our work was both conflicting and complementary, so I teamed up with Ingo quickly to get this into a usable shape. Others like Steven Rostedt brought in ideas and experience from other Linux Real-Time research efforts. With a quickly forming loose team of interested developers, we were able to develop a halfway usable Real-Time solution that was fully integrated into the Linux kernel in a short period of time. That was far from a maintainable and production-ready solution. Still, we had laid the groundwork and proven that the concept of making the Linux Kernel real-time capable was feasible. The idea and intent of fully integrating this into the mainline Linux kernel over time were there from the very beginning.

JP: Why is it still a separate project from the Mainline kernel today?

TG: To integrate the real-time patches into the Linux kernel, a lot of preparatory work, restructuring, and consolidation of the mainline codebase had to be done first. While many pieces that emerged from the real-time work found their way into the mainline kernel rather quickly due to their isolation, the more intrusive changes that change the Linux kernel’s fundamental behavior needed (and still need) a lot of polishing and careful integration work.

Naturally, this has to be coordinated with all the other ongoing efforts to adapt the Linux kernel to different use cases ranging from tiny embedded systems to supercomputers.

This also requires carefully designing the integration so it does not get in the way of other interests or impose roadblocks to further development of the Linux kernel, which is something the community, and especially Linus Torvalds, cares about deeply.

As long as these remaining patches are out of the mainline kernel, this is not a problem because it does not put any burden or restriction on the mainline kernel. The responsibility lies with the real-time project, but on the other hand, in this context, there is no restriction on taking shortcuts that would never be acceptable in the upstream kernel.

The real-time patches are fundamentally different from something like a device driver that sits at some corner of the source tree. A device driver does not cause any larger damage when it goes unmaintained and can be easily removed when it reaches the final state of bit-rot. Conversely, the PREEMPT_RT core technology is in the heart of the Linux kernel. Long-term maintainability is key as any problem in that area will affect the Linux user universe as a whole. In contrast, a bit-rotted driver only affects the few people who have a device depending on it.

JP: Traditionally, when I think about RTOS, I think of legacy solutions based on closed systems. Why is it essential we have an open-source alternative to them?

TG: The RTOS landscape is broad and, in many cases, very specialized. As I mentioned on the question of “what is real-time,” certain application scenarios require a fully validated RTOS, usually according to an application space-specific standard and often regulatory law. Aside from that, many RTOSes are limited to a specific class of CPU devices that fit into the targeted application space. Many of them come with specialized application programming interfaces which require special tooling and expertise.

The Real-Time Linux project never aimed at these narrow and specialized application spaces. It always was meant to be the solution for 99% of the use cases and to be able to fully leverage the flexibility and scalability of the Linux kernel and the broader FOSS ecosystem so that integrated solutions with mixed-criticality workloads can be handled consistently.

Developing real-time applications on a real-time enabled Linux kernel is not much different from developing non-real-time applications on Linux, except for the careful selection of system interfaces that can be utilized and programming patterns that should be avoided, but that is true for real-time application programming in general independent of the RTOS.
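As an illustration of "careful selection of system interfaces," an application that needs real-time behavior typically asks the kernel for a real-time scheduling policy such as SCHED_FIFO. The Python sketch below uses the standard `os.sched_setscheduler` call; the helper names, the priority value of 50, and the way it detects an RT kernel (a `/sys/kernel/realtime` file containing "1", or `PREEMPT_RT` in the kernel version string) are assumptions to verify on your own system. Python itself is not a suitable language for tight real-time loops; the point is only which kernel interfaces are involved.

```python
import os
import platform


def running_preempt_rt():
    """Best-effort guess whether the running kernel has PREEMPT_RT enabled.

    Assumption: RT kernels expose /sys/kernel/realtime containing "1" and
    usually mention PREEMPT_RT in the kernel version string.
    """
    try:
        with open("/sys/kernel/realtime") as f:
            if f.read().strip() == "1":
                return True
    except OSError:
        pass
    return "PREEMPT_RT" in platform.uname().version


def request_fifo_priority(priority=50):
    """Try to switch the calling process to SCHED_FIFO at `priority`.

    Returns True on success. This usually needs root or CAP_SYS_NICE, and the
    call only exists on platforms that provide it (e.g. Linux).
    """
    if not hasattr(os, "sched_setscheduler"):
        return False
    try:
        os.sched_setscheduler(0, os.SCHED_FIFO, os.sched_param(priority))
        return True
    except OSError:  # includes PermissionError when privileges are missing
        return False
```

On a PREEMPT_RT system with sufficient privileges, `request_fifo_priority()` would be expected to return True; elsewhere it returns False rather than raising.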

The important difference is that the tools and concepts are all the same, and integration into and utilizing the larger FOSS ecosystem comes for free.

The downside of PREEMPT_RT is that it can’t be fully validated, which excludes it from specific application spaces, but there are efforts underway, e.g., the LF ELISA project, to fill that gap. The reason behind this is that large multiprocessor systems have become a commodity, and the need for more complex real-time systems in various application spaces, e.g., assisted / autonomous driving or robotics, requires a more flexible and scalable RTOS approach than what most of the specialized and validated RTOSes can provide.

That’s a long way down the road. Still, there are solutions out there today which utilize external mechanisms to achieve the safety requirements in some of the application spaces while leveraging the full potential of a real-time enabled Linux kernel along with the broad offerings of the wider FOSS ecosystem.

JP: What are examples of products and systems that use the real-time patch set that people depend on regularly?

TG: It’s all over the place now. Industrial automation, control systems, robotics, medical devices, professional audio, automotive, rockets, and telecommunication, just to name a few prominent areas.

JP: Who are the major participants currently developing systems and toolsets with the real-time Linux kernel patch set?

TG: Listing them all would be equivalent to reciting the “who’s who” in the industry. On the distribution side, there are offerings from, e.g., Red Hat, SUSE, Mentor, and Wind River, which deliver RT to a broad range of customers in different application areas. There are firms like Concurrent, National Instruments, Boston Dynamics, SpaceX, and Tesla, just to name a few on the products side.

Red Hat and National Instruments are also members of the LF collaborative Real-Time project.

JP: What are the challenges in developing a real-time subsystem or specialized kernel for Linux? Is it any different than how other projects are run for the kernel?

TG: Not really different; the same rules apply. Patches have to be posted, are reviewed, and discussed. The feedback is then incorporated. The loop starts over until everyone agrees on the solution, and the patches get merged into the relevant subsystem tree and finally end up in the mainline kernel.

But as I explained before, it needs a lot of care and effort and, often enough, a large amount of extra work to restructure existing code first to get a particular piece of the patches integrated. The result provides the desired functionality while at the same time not getting in the way of other interests or, ideally, providing a benefit for everyone.

The technology’s complexity, which reaches into a broad range of the core kernel code, is obviously challenging, especially combined with the mainline kernel’s rapid rate of change. In areas like drivers or file systems, even larger changes at the core infrastructure level do not impact ongoing development and integration work too much. But any change to the core infrastructure can break a carefully thought-out integration of the real-time parts into that infrastructure and send us back to the drawing board for a while.

JP: Which companies have been supporting the effort to get the PREEMPT_RT Linux kernel patches upstream?

TG: For the past five years, it has been supported by the members of the LF real-time Linux project, currently ARM, BMW, CIP, ELISA, Intel, National Instruments, OSADL, Red Hat, and Texas Instruments. CIP, ELISA, and OSADL are projects or organizations on their own which have member companies all over the industry. Former supporters include Google, IBM, and NXP.

I personally, my team and the broader Linux real-time community are extremely grateful for the support provided by these members.

However, as with other key open source projects heavily used in critical infrastructure, funding always was and still is a difficult challenge. Even if the amount of money required to keep such low-level plumbing but essential functionality sustained is comparatively small, these projects struggle with finding enough sponsors and often lack long-term commitment.

The approach to funding these kinds of projects reminds me of the Mikado Game, which is popular in Europe, where the first player who picks up the stick and disturbs the pile often is the one who loses.

That’s puzzling to me, especially as many companies build key products depending on these technologies and seem to take the availability and sustainability for granted up to the point where such a project fails, or people stop working on it due to lack of funding. Such companies should seriously consider supporting the funding of the Real-Time project.

It’s a lot like the Jenga game, where everyone pulls out as many pieces as they can up until the point where it collapses. We cannot keep taking; we have to give back to these communities putting in the hard work for technologies that companies heavily rely on.

I gave up long ago trying to make sense of that, especially when looking at the insane amounts of money thrown at the over-hyped technology of the day. Even if critical for a large part of the industry, low-level infrastructure lacks the buzzword charm that attracts attention and makes headlines — but it still needs support.

JP: One of the historical concerns was that Real-Time didn’t have a community associated with it; what has changed in the last five years?

TG: There is a lively user community, and quite a bit of the activity comes from the LF project members. On the development side itself, we are slowly gaining more people who understand the intricacies of PREEMPT_RT and also people who look at it from other angles, e.g., analysis and instrumentation. Some fields could be improved, like documentation, but there is always something that can be improved.

JP: What will the Real-Time Stable team be doing once the patches are accepted upstream?

TG: The stable team is currently overseeing the RT variants of the supported mainline stable versions. Once everything is integrated, this will dry out to some extent once the older versions reach EOL. But their expertise will still be required to keep real-time in shape in mainline and in the supported mainline stable kernels.

JP: So once the upstreaming activity is complete, what happens afterward?

TG: Once upstreaming is done, efforts have to be made to enable RT support for specific Linux features currently disabled on real-time enabled kernels. Also, for quite some time, there will be fallout when other things change in the kernel, and there has to be support for kernel developers who run into the constraints of RT, which they did not have to think about before.

The latter is a crucial point for this effort because there needs to be a clear, longer-term commitment that the people who are deeply familiar with the matter and the concepts are not going to vanish once the mainlining is done. We can’t leave everybody else with the task of wrapping their brains around it in desperation; there cannot be institutional knowledge loss with a system as critical as this.

The lack of such a commitment would be a showstopper on the final step because we are now at the point where the notable changes are focused on the real-time only aspects rather than welcoming cleanups, improvements, and features of general value. This, in turn, circles back to the earlier question of funding and industry support — for this final step requires several years of commitment by companies using the real-time kernel.

There’s not going to be a shortage of things to work on. It’s not going to be as much as the current upstreaming effort, but as the kernel never stops changing, this will be interesting for a long time.

JP: Thank you, Thomas, for your time this morning. It’s been an illuminating discussion.

To get involved with the real-time kernel patch for Linux, please visit the PREEMPT_RT wiki at The Linux Foundation or email real-time-membership@linuxfoundation.org.


The post OpenTreatments Foundation: Democratizing and Decentralizing Drug Development appeared first on Linux.com.


The post Open Source Web Engine Servo to be Hosted at Linux Foundation appeared first on Linux.com.


KubeCon, November 17, 2020 – The Linux Foundation, the nonprofit organization enabling mass innovation through open source, today announced it will host the Servo web engine. Servo is an open source, high-performance browser engine designed for both application and embedded use and is written in the Rust programming language, bringing lightning-fast performance and memory safety to browser internals. Industry support for this move is coming from Futurewei, Let’s Encrypt, Mozilla, Samsung, and Three.js, among others.

“The Linux Foundation’s track record for hosting and supporting the world’s most ubiquitous open source technologies makes it the natural home for growing the Servo community and increasing its platform support,” said Alan Jeffrey, Technical Chair of the Servo project. “There’s a lot of development work and opportunities for our Servo Technical Steering Committee to consider, and we know this cross-industry open source collaboration model will enable us to accelerate the highest priorities for web developers.”

Read more at The Linux Foundation and at the Mozilla Foundation.


The post The state of the art of microservices in 2020 appeared first on Linux.com.

James Lewis and Martin Fowler (2014) [6]

Introduction

It is expected that in 2020, the global cloud microservices market will grow at a rate of 22.5%, with the US market projected to maintain a growth rate of 27.4% [5]. The trend is for developers to move away from locally hosted applications and shift to the cloud, which helps businesses minimize downtime, optimize resources, and reduce infrastructure costs. Experts also predict that by 2022, 90% of all applications will be developed using microservices architecture [5]. This article will help you learn what microservices are and how companies are using them today.

What are microservices?

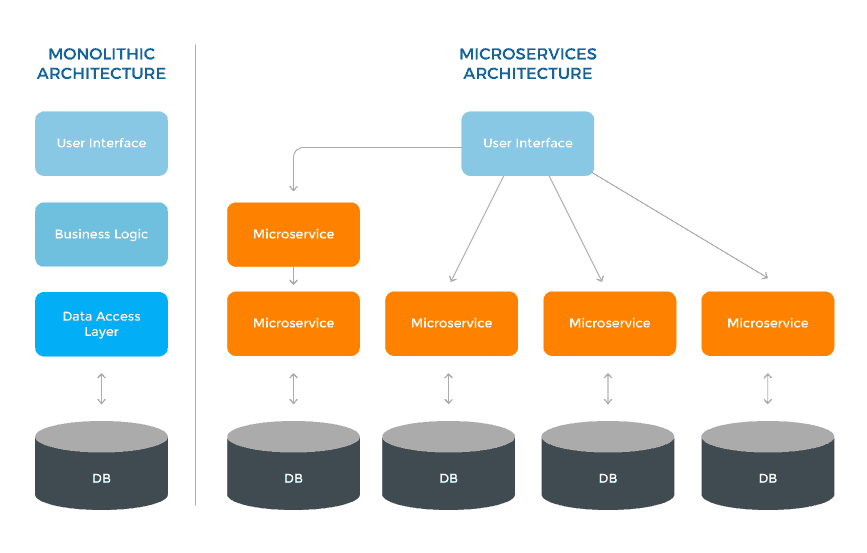

Microservices have been widely adopted around the world, but what exactly are they? Microservices is an architectural pattern in which an application is built from many small, interconnected services. Each service follows the single responsibility principle, which according to Robert C. Martin is “gathering things that change for the same reason, and separate those things that change for different reasons” [2]. The services are loosely coupled, so each one can be developed, deployed, and maintained independently [2].
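To make the single responsibility principle more concrete, here is a minimal sketch built around a hypothetical shop. The names `PricingService`, `NotificationService`, and `checkout` are invented for illustration. Each class has exactly one reason to change and keeps its own data; in a real deployment each would run as a separate process behind its own API rather than as an in-process object.

```python
from dataclasses import dataclass, field


@dataclass
class PricingService:
    """Hypothetical service: changes only when pricing rules change."""
    tax_rate: float = 0.2  # assumed flat tax rate, for illustration only

    def quote(self, net_cents: int) -> int:
        # Work in integer cents to avoid floating-point totals.
        return round(net_cents * (1 + self.tax_rate))


@dataclass
class NotificationService:
    """Hypothetical service: changes only when messaging rules change."""
    outbox: list[str] = field(default_factory=list)  # data owned by this service

    def notify(self, customer: str, total_cents: int) -> None:
        self.outbox.append(f"{customer}: your total is {total_cents / 100:.2f}")


def checkout(pricing: PricingService, notifier: NotificationService,
             customer: str, net_cents: int) -> int:
    # The caller composes the services only through their public operations.
    total = pricing.quote(net_cents)
    notifier.notify(customer, total)
    return total
```

With this split, changing the tax rule touches only `PricingService`, and changing the message format touches only `NotificationService`.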

Moving away from monolithic architectures

Microservices are often compared to traditional monolithic software architecture. In a monolithic architecture, software is designed to be self-contained; that is, the program’s components are interconnected and interdependent rather than loosely coupled. In such a tightly coupled architecture, each component and its associated components must be present for the code to be executed or compiled [7]. Additionally, if any component needs to be updated, the whole application needs to be rewritten.

That’s not the case for applications built with a microservices architecture. Because each module is independent, it can be changed without affecting other parts of the program, which reduces the risk that a change made to one component will cause unanticipated changes in other components.
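One common way to keep services independent of each other is to have them communicate through events instead of direct calls. The sketch below uses a hypothetical in-memory `EventBus` as a stand-in for a real message broker: the publisher does not know who, if anyone, consumes its events, so consumers can be added, changed, or removed without touching the publisher. The topic name `order.placed` and the function `place_order` are illustrative assumptions.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class EventBus:
    """Hypothetical, synchronous, in-memory stand-in for a message broker."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> None:
        # Deliver to every current subscriber; the publisher never sees them.
        for handler in list(self._subscribers[topic]):
            handler(event)


def place_order(bus: EventBus, order_id: str, amount: int) -> None:
    # The order service only announces what happened.
    bus.publish("order.placed", {"order_id": order_id, "amount": amount})


bus = EventBus()
shipped, billed = [], []
bus.subscribe("order.placed", lambda e: shipped.append(e["order_id"]))
bus.subscribe("order.placed", lambda e: billed.append(e["amount"]))
place_order(bus, "A-1", 42)
```

A production broker adds what this sketch leaves out: delivery across processes, durability, and asynchronous consumption.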

Companies can run into trouble if they cannot scale a monolithic architecture, if it is difficult to upgrade, or if its maintenance is too complex and costly [4]. Breaking a complex application down into smaller components that work independently of each other solves this problem.

How developers around the world build their microservices

Microservices are well known for improving scalability and performance. But are those the main reasons developers around the world build them? The State of Microservices 2020 research project [1] surveyed how developers worldwide build their microservices and what they think of the approach. The report draws on 660 microservice experts from Europe, North America, Central and South America, the Middle East, South-East Asia, Australia, and New Zealand. The table below presents the average ratings on questions related to the maturity of microservices [1].

| Category | Average rating (out of 5) |
|---|---|
| Setting up a new project | 3.8 |
| Maintenance and debugging | 3.4 |
| Efficiency of work | 3.9 |
| Solving scalability issues | 4.3 |
| Solving performance issues | 3.9 |
| Teamwork | 3.9 |

As the table shows, experts are most satisfied with microservices for solving scalability issues (4.3), while maintenance and debugging (3.4) appear to be their biggest challenge.
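This reading of the table can be checked with a few lines of code. The sketch below simply copies the six averages from the table; it is a convenience, not part of the survey’s own analysis. The overall figure is an unweighted mean of the category averages, not a mean over individual responses, which the report does not provide here.

```python
from statistics import mean

# Average ratings (out of 5) copied from the table above [1].
ratings = {
    "Setting up a new project": 3.8,
    "Maintenance and debugging": 3.4,
    "Efficiency of work": 3.9,
    "Solving scalability issues": 4.3,
    "Solving performance issues": 3.9,
    "Teamwork": 3.9,
}

best = max(ratings, key=ratings.get)
worst = min(ratings, key=ratings.get)
overall = round(mean(ratings.values()), 2)  # unweighted mean of category averages

print(f"Highest: {best} ({ratings[best]})")
print(f"Lowest: {worst} ({ratings[worst]})")
print(f"Unweighted mean across categories: {overall}")
```

It reports scalability as the highest-rated category, maintenance and debugging as the lowest, and an unweighted mean of about 3.87.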

Asked about the leading technologies in their architecture, most experts reported using JavaScript/TypeScript (almost ⅔ of microservices are built with those languages), followed by Java.

Although there are plenty of options for deploying microservices, the most popular choice is Amazon Web Services (49%), followed by the respondents’ own servers. Additionally, 62% prefer AWS Lambda as their serverless solution.
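For readers unfamiliar with the serverless model, AWS Lambda runs a function in response to events instead of a long-lived server. Below is a minimal sketch of a Python handler. It assumes the function is invoked through an Amazon API Gateway proxy integration, which passes query parameters in `event["queryStringParameters"]` and expects a response dictionary with `statusCode`, `headers`, and `body`. The greeting logic is purely illustrative.

```python
import json


def lambda_handler(event, context):
    """Hypothetical greeting microservice deployed as an AWS Lambda function.

    `event` is assumed to follow the API Gateway proxy format; `context`
    (runtime metadata supplied by Lambda) is not used here.
    """
    # queryStringParameters is None when the request has no query string.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

Because the handler is a plain function, it can be exercised locally by passing a dictionary shaped like the expected event and `None` for the context.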

Most of the respondents’ microservices communicate over HTTP, followed by events and gRPC. For message brokers, RabbitMQ is the most popular choice, followed by Kafka and Redis.
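Since HTTP is the most common option, here is a minimal sketch of one service calling another over HTTP using only the Python standard library. The inventory service, its `/stock/<sku>` path, and the `can_ship` caller are assumptions for illustration. A production system would add retries and service discovery, and would run each service as its own process rather than as a thread.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from urllib.request import urlopen

STOCK = {"sku-1": 5, "sku-2": 0}  # hypothetical data owned by the inventory service


class InventoryHandler(BaseHTTPRequestHandler):
    """Hypothetical inventory service exposing GET /stock/<sku>."""

    def do_GET(self) -> None:
        prefix = "/stock/"
        sku = self.path[len(prefix):] if self.path.startswith(prefix) else None
        if sku not in STOCK:
            self.send_error(404, "unknown SKU")
            return
        body = json.dumps({"sku": sku, "available": STOCK[sku]}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, fmt, *args) -> None:
        pass  # silence the default per-request logging in this demo


def start_inventory_service() -> ThreadingHTTPServer:
    # Port 0 asks the OS for any free port; the real one is in server_address.
    server = ThreadingHTTPServer(("127.0.0.1", 0), InventoryHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def can_ship(base_url: str, sku: str) -> bool:
    # The order service depends only on the inventory service's HTTP contract.
    with urlopen(f"{base_url}/stock/{sku}", timeout=2) as resp:
        return json.load(resp)["available"] > 0
```

To try it, start the service, build `base_url` from `server.server_address[1]`, call `can_ship`, and stop the server with `server.shutdown()`. An unknown SKU makes `urlopen` raise an `HTTPError` for the 404 response.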

Most respondents also use continuous integration (CI) with their microservices: 87% use CI solutions such as GitLab CI, Jenkins, or GitHub Actions.

Logging was the most popular debugging solution, used by 86% of the respondents; 27% of the respondents rely on logs alone.
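Because one request may pass through several services, logs are much easier to debug when every line carries an identifier for the request that produced it. The sketch below uses Python’s standard `logging` module and a `contextvars` variable to stamp each record with a correlation ID. The service names, the `correlation_id` field, and the helper functions are illustrative assumptions, not something the survey prescribes. In a real system, the ID would be forwarded between services with each request.

```python
import contextvars
import io
import logging
import uuid
from typing import Optional

# Correlation ID of the request currently being handled in this context.
correlation_id = contextvars.ContextVar("correlation_id", default="-")


class CorrelationFilter(logging.Filter):
    """Copy the current correlation ID onto every log record."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.correlation_id = correlation_id.get()
        return True


def make_logger(service: str, stream) -> logging.Logger:
    """Create a logger for one hypothetical service that writes to `stream`."""
    logger = logging.getLogger(service)
    logger.setLevel(logging.INFO)
    logger.handlers.clear()  # drop handlers left over from an earlier call
    handler = logging.StreamHandler(stream)
    handler.addFilter(CorrelationFilter())
    handler.setFormatter(logging.Formatter(
        "%(correlation_id)s %(name)s %(levelname)s %(message)s"))
    logger.addHandler(handler)
    logger.propagate = False  # don't also emit through the root logger
    return logger


def handle_request(order_log: logging.Logger, payment_log: logging.Logger,
                   incoming_id: Optional[str] = None) -> str:
    # Reuse an ID passed in by the caller, otherwise mint a new one.
    cid = incoming_id or uuid.uuid4().hex
    correlation_id.set(cid)
    order_log.info("order received")
    payment_log.info("payment authorized")
    return cid


def demo() -> list[str]:
    buf = io.StringIO()
    orders = make_logger("orders", buf)
    payments = make_logger("payments", buf)
    handle_request(orders, payments, incoming_id="req-42")
    return buf.getvalue().splitlines()
```

Filtering all services’ logs by one ID then reconstructs the path of a single request, which plain unlabeled log lines cannot do.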

Finally, most respondents think that microservice architecture will become a standard either for more complex systems or for backend development.

Successful use cases of microservices

Many companies have changed from a monolithic architecture to microservices.

Amazon

In 2001, development delays, coding challenges, and service interdependencies prevented Amazon from meeting the scalability requirements of its growing user base. Needing to refactor its monolithic architecture from scratch, Amazon broke its monolithic applications into small, independent, service-specific applications [3][9].

Amazon made this move to microservices years before the term came into fashion. The change also led Amazon to develop several solutions that support microservices architectures, such as Amazon Web Services (AWS). Amid its rapid growth and adoption of microservices, Amazon became one of the most valuable companies in the world, with a market capitalization of $1.433 trillion on July 1, 2020 [8].

Netflix

Netflix started its movie-streaming service in 2007, and by 2008 it was facing scaling challenges. It suffered a major database corruption and, for three days, could not ship DVDs to its members [10]. This was the point at which Netflix realized it needed to move away from single points of failure (e.g., relational databases) toward a more scalable and reliable distributed system in the cloud. In 2009, Netflix started to refactor its monolithic architecture into microservices, beginning by migrating its non-customer-facing movie-encoding platform to run in the cloud as independent microservices [11]. Moving to microservices allowed Netflix to overcome its scaling challenges and service outages. It also reduced costs, since its cloud costs per streaming start turned out to be lower than its data center costs [10]. Today, Netflix streams approximately 250 million hours of content daily to over 139 million subscribers in 190 countries [11].

Uber

After launch, Uber struggled to develop and launch new features, fix bugs, and integrate new changes rapidly. The company therefore moved to microservices, breaking the application structure into cloud-based microservices, one for each function, such as passenger management and trip management. Moving to microservices brought Uber many benefits, such as clear ownership of each service. This improved speed and quality, facilitated fast scaling by allowing teams to focus only on the services they needed to scale, made it possible to update individual services without disrupting others, and provided more reliable fault tolerance [11].

It’s all about scalability!

China offers a good example of how to provide scalability. With its vast population, China has had to create and test new solutions to challenges at scale. Statistics show that China has roughly 900 million Internet users [14]. During Singles’ Day 2019 (China’s equivalent of Black Friday), Alibaba’s various shopping platforms peaked at 544,000 transactions per second, and the total amount of data processed on Alibaba Cloud was around 970 petabytes [15]. So what do numbers like these imply for technology?

Many technologies have emerged from the need to address scalability. For example, TARS was created by Tencent in 2008 and contributed to the Linux Foundation in 2018; it has been used at scale and enhanced for ten years [12]. TARS is open source, and many organizations contribute significantly to extending the framework’s features and value [12]. TARS supports multiple programming languages, including C++, Golang, Java, Node.js, PHP, and Python. It can quickly build systems and automatically generate code, allowing developers to focus on business logic and improving operational efficiency. TARS has been widely used in Tencent’s QQ and WeChat social networks, financial services, edge computing, automotive, video, online games, maps, application market, security, and many other core businesses. In March 2020, the TARS project transitioned into the TARS Foundation, an open source microservice foundation created to support the rapid growth of contributions and membership for a community focused on building an open microservices platform [12].

Be sure to check out the Linux Foundation’s new free training course, Building Microservice Platforms with TARS.

About the authors:

Isabella Ferreira is an Advocate at TARS Foundation, a cloud-native open-source microservice foundation under the Linux Foundation.

Mark Shan is Chairman at Tencent Open Source Alliance and also Board Chair at TARS Foundation.

References:

[1] https://tsh.io/state-of-microservices/#ebook

[2] https://medium.com/hashmapinc/the-what-why-and-how-of-a-microservices-architecture-4179579423a9

[3] https://www.plutora.com/blog/understanding-microservices

[4] https://www.leanix.net/en/blog/a-brief-history-of-microservices

[5] https://www.charterglobal.com/five-microservices-trends-in-2020/

[6] https://martinfowler.com/articles/microservices.html#footnote-etymology

[7] https://whatis.techtarget.com/definition/monolithic-architecture

[8] https://ycharts.com/companies/AMZN/market_cap

[9] https://thenewstack.io/led-amazon-microservices-architecture/

[10] https://media.netflix.com/en/company-blog/completing-the-netflix-cloud-migration

[11] https://blog.dreamfactory.com/microservices-examples/

[13] https://medium.com/microservices-architecture/top-10-microservices-framework-for-2020-eefb5e66d1a2

[14] https://www.statista.com/statistics/265140/number-of-internet-users-in-china/

[15] https://interconnected.blog/china-scale-technology-sandbox/

This Linux Foundation Platinum Sponsor content was contributed by Tencent.

The post Why CII best practices gold badges are important appeared first on Linux.com.

“…a CII Best Practices badge, especially a gold badge, shows that an OSS project has implemented a large number of good practices to keep the project sustainable, counter vulnerabilities from entering their software, and address vulnerabilities when found. Projects take many such steps to earn a gold badge, and it’s a good thing to see.”

Read more at the Linux Foundation
